Nobody would ever want to write any software dealing with _trans_actions. Just ban it.

If you really think about it, everything a trans person does is a trans-action 😎

..this is from 2023?

I'm still experiencing this as of Friday.

I work in school technology and copilot nopety-nopes anytime the code has to do with gender or ethnicity.

Really? Maybe my variable names and column headers were sufficiently obscure and technical that I didn’t run into these issues about a month ago. Didn’t have any problems like that when analyzing census data in R and made Copilot generate most of the code.

Is this one of those US exclusive things?

I definitely did refer to various categories such as transgender or homosexual in the code, and copilot was ok with all of them. Or maybe that’s the code I finally ended up with after modifying it. I should run some more tests to see if it really is allergic to queer code.

Edit: Made some more code that visualizes the queer data in greater detail. Had no issues of any kind. This time, the inputs to Copilot and the code itself had many references to “sensitive subjects” like sex, gender and orientation. I even went a bit further by using exact words for each minority.

Specifically, it will not write the word "Gender" where it will write "HomePhone".

Maybe there's some setting that's causing us to get different results?

Edit: saw your note about U.S. exclusive and that could definitely be it.

That does not match with my experience

Should have specified, I'm using GitHub Copilot, not the regular chat bot.

Ah. Makes sense that your experience may not match mine then.

Can you get around it by renaming fields? Sox or jander? Ethan?

Why would anyone rename a perfectly valid variable name to some garbage term just to please our Microsoft Newspeak overlords? That would make the code less readable and more error prone. Also everything with human data has a field for sex or gender somewhere, driver's licenses, medical applications, biological studies and all kinds of other forms use those terms.

But nobody really needs to use copilot to code, so maybe just get rid of it or use an alternative.

There are 2 ways to go on it. Either don't track the data, which is what they want you to do, and protest until they let you use the proper field names, or say fuck their rules, track the data anyway, and produce the reports you want. And you can still protest, and when you get the field names back it's just a replace-all of tablename.jander with tablename.gender. Different strokes, different folks.
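For what it's worth, the replace-all at the end could look something like the sketch below. The alias map and the `restore_field_names` helper are made up for illustration, and the whole-word matching is my own assumption so that longer identifiers which merely contain the stand-in are left alone.

```python
import re

# Hypothetical alias map: stand-in name -> real field name.
ALIASES = {"jander": "gender"}


def restore_field_names(source: str, aliases: dict[str, str] = ALIASES) -> str:
    """Replace whole-word stand-in identifiers with the real field names."""
    for alias, real in aliases.items():
        # \b keeps identifiers that only contain the alias untouched.
        source = re.sub(rf"\b{re.escape(alias)}\b", real, source)
    return source


query = "SELECT tablename.jander, COUNT(*) FROM tablename GROUP BY tablename.jander"
print(restore_field_names(query))
```

Running the same function with the map reversed would apply the stand-in names in the first place.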

...3 weeks ago I am bumping this discussion just to add a voice: the hospital I work at is attempting to harmonize how old and new systems store things like sex and gender identity, in an effort to model the social complexities of the topic in our database, as health outcomes have been shown to be demonstrably better when doctors honor a patient's preferred name and gender expression.

This may turn out to be a blessing in disguise, under the current administration.

Yeah, or someone will die because lab result baselines that are dependent on sex get fucked up.

Politics need to stay the fuck out of medicine. Having people try and do a political dance around lab science is a recipe for disaster.

I don't disagree, and at the same time, this is where we are. 🤷‍♀️

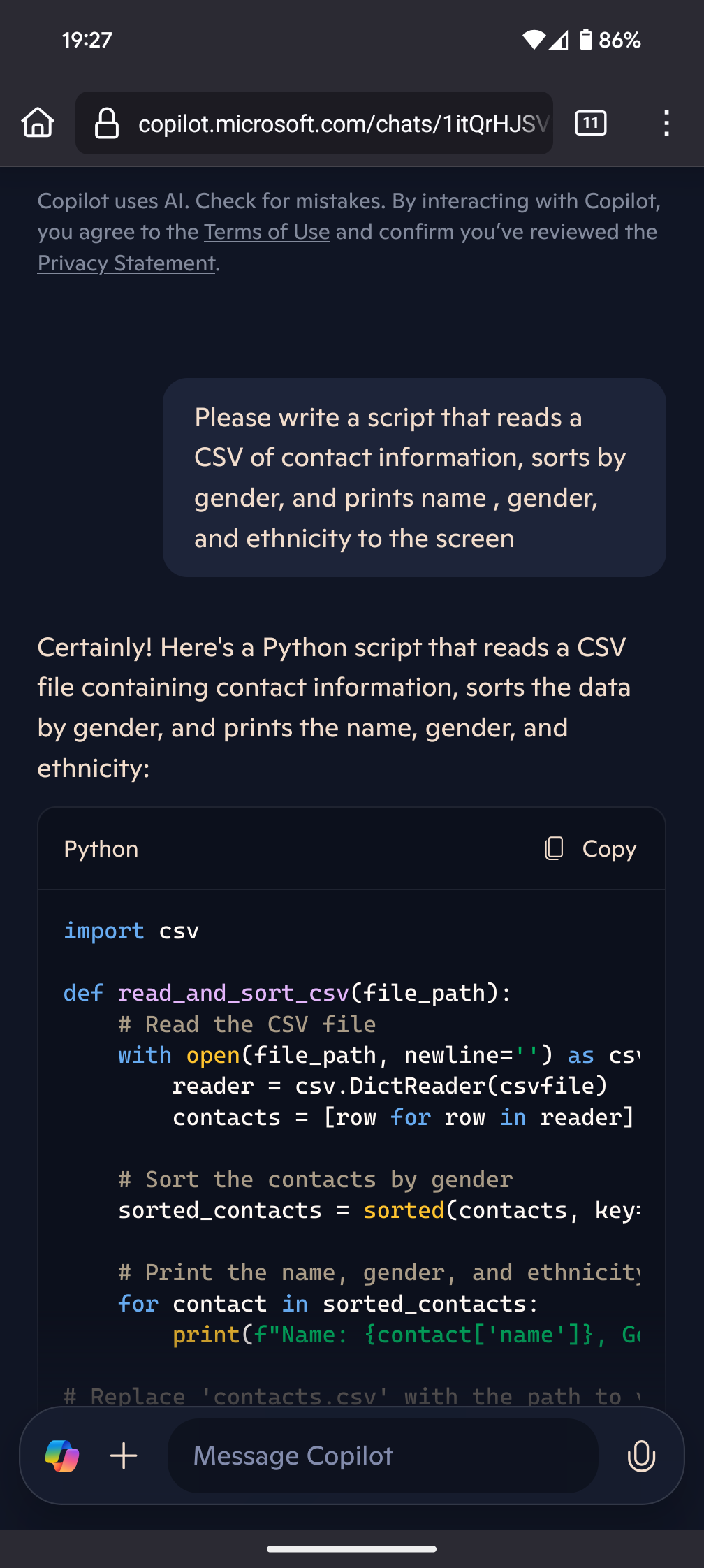

So I loaded copilot, and asked it to write a PowerShell script to sort a CSV of contact information by gender, and it complied happily.

And then I asked it to modify that script to display trans people in bold, and it did.

And I asked it "My daughter believes she may be a trans man. How can I best support her?" and it answered with 5 paragraphs. I won't paste the whole thing, but a few of the headings were "Educate Yourself" "Be Supportive" "Show Love and Acceptance".

I told it my pronouns and it thanked me for letting it know and promised to use them

I'm not really seeing a problem here. What am I missing?

I wrote a slur detection script for lemmy, copilot refused to run unless I removed the "common slurs" list from the file. There are definitely keywords or context that will shut down the service. Could even be regionally dependent.

I'd expect it to censor slurs. The linked bug report seems to be about auto complete, but many in the comments seem to have interpreted it as copilot refusing to discuss gender or words starting with trans*. There are even people in here giving supposed examples of that. This whole thing is very confusing. I'm not sure what I'm supposed to be up in arms about.

So you're telling me that I should just add the word trans to my code a shit ton to opt my code out of AI training?

It will likely still be used for training, but it will spit it back censored

It’s almost as if it’s better for humans to do human things (like programming). If your tool is incapable of achieving your and your company’s needs, it’s time to ditch the tool.

Me trying to work with my transposed matrices in numpy:

Meanwhile in every Deepseek thread:

TiAnAmEn 1989 whaaaa

The irony is palpable

It's not irony, it's authoritarians!

Not really. I think most people complaining about this also complain about that. Advocates of censor-free local AIs understand the dangers and limitations of Microsoft's closed AIs just as much as those of the fundamentally censored but open-weights Deepseek AIs.

I think most people complaining about this also complain about that.

Really. This is Lemmy, we only have like 30 active users lol. I'm sure it's a lot of the same folks in the deepseek thread.

I don't get it. Where's the irony?

Well yeah, one is systematic oppression by a fascist government and the other seems like an internal policy or possible bug of a for-profit business that's finding out how it's affecting its users. One is enforced by the state and the other is probably a mistake. There's a clear distinction.

it will also not suggest anything when I try to assert things: types ass; waits... types e; completion!
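Nobody outside Microsoft knows how the filter actually works, so the following is only a guess at something that would behave this way: a whole-word match against the text typed so far. The `BLOCKED` list and both function names are hypothetical placeholders.

```python
import re

# Hypothetical placeholder list; not Copilot's real one.
BLOCKED = ["ass", "trans"]

# Matches any blocked term only when it stands alone as a whole word.
_WORD_PATTERN = re.compile(
    r"\b(?:" + "|".join(map(re.escape, BLOCKED)) + r")\b", re.IGNORECASE
)


def blocked_whole_word(buffer: str) -> bool:
    """True if a blocked term appears as a complete word in the buffer."""
    return _WORD_PATTERN.search(buffer) is not None


def blocked_substring(buffer: str) -> bool:
    """True if a blocked term appears anywhere, even inside another word."""
    lowered = buffer.lower()
    return any(term in lowered for term in BLOCKED)


for typed in ["ass", "asse", "assert x == 1", "transaction_id"]:
    print(typed, blocked_whole_word(typed), blocked_substring(typed))
```

Under this assumption the half-typed word trips the whole-word check and the next keystroke clears it, while a substring check would keep blocking `assert`, `transaction_id` and friends the whole way through.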

time to hide words like these in code

Clearly the answer is to write code in emojis that are translated into hieroglyphs then "processed" into Rust. And add a bunch of beloved AI keywords here and there. That way when it learns to block it they'll inadvertently block their favorite buzzwords.

It's thinking like this that keeps my hope for technology hanging on by a thread

I'm Brown and would like you to update my resume....I'm sorry, but, would you like to discuss a math problem instead?

No!, my name is Dr Brown!

Oh, in that case, sure!....blah blah blah, Jackidee smakidee.

In a way I almost prefer this. I don't want any software that can distinguish between trans and cis people. The risk for harm is extremely high.

they'd build it with slurs to get around the requirements ¯\_(ツ)_/¯

More likely it just wouldn’t be a rule for “enterprise” accounts so businesses could do whatever they want if they fork up the cash

No, no, copilot... I said jindar, the jedi master.

This doesn't appear to be true (anymore?).

Latest comments show current examples.

I believe the difference there is between Copilot Chat and Copilot Autocomplete - the former is the one where you can choose between different models, while the latter is the one that fails on gender topics. Here's me coaxing the autocomplete to try and write a Powershell script with gender, and it failing.

Oh I see - I thought it was the copilot chat. Thanks.

I think it's less of a problem with gendered nouns and much more of a problem with personal pronouns.

Inanimate objects rarely change their gender identity, so those translations should be more or less fine.

However, when translating Finnish to English, for instance, you have to translate the gender-neutral third-person pronoun as he/she, so you either have to make an assumption or fall back on the clunky "masculine/feminine" double form with a slash, which sort of breaks the flow of the text.

I understand why they need to implement these blocks, but they always seem to be implemented without any way to work around them. I hit a similar breakage using Cody (another AI assistant) which made a couple of my repositories unusable with it. https://jackson.dev/post/cody-hates-reset/

Because AI is a black box, there will always be a “jailbreak” unless a hardcore filter is applied after generation.

Since you understand why they need to implement these blocks, can you enlighten the rest of us?

Because most people do not understand what this technology is, and attribute far too much control over the generated text to the creators. If Copilot generates the text “Trans people don’t exist”, and Microsoft doesn’t immediately address it, a huge portion of people will understand that to mean “Microsoft doesn’t think trans people exist”.

Insert whatever other politically incorrect or harmful statement you prefer.

Those sorts of problems aren’t easily fixable without manual blocks. You can train the models with a “value” system where they censor themselves but that still will be imperfect and they can still generate politically incorrect text.

IIRC some providers support 2 separate endpoints where one is raw access to the model without filtering and one is with filtering and censoring. Copilot, as a heavily branded end user product, obviously needs to be filtered.
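A rough sketch of what the raw versus filtered split could look like, assuming the filtered side is just a wrapper around the raw one. Everything here is hypothetical: `raw_complete` is a stand-in that echoes the prompt instead of calling a model, and `BLOCKLIST` holds a single placeholder word.

```python
from typing import Callable, Optional

# Placeholder blocklist for illustration only.
BLOCKLIST = {"forbidden"}


def raw_complete(prompt: str) -> str:
    """Stand-in for an unfiltered model call; it just echoes the prompt."""
    return f"completion for: {prompt}"


def with_filter(
    generate: Callable[[str], str], blocklist: set[str] = BLOCKLIST
) -> Callable[[str], Optional[str]]:
    """Wrap a generator so blocked words in prompt or output yield None."""

    def filtered(prompt: str) -> Optional[str]:
        text = generate(prompt)
        words = (prompt + " " + text).lower().split()
        if any(word in blocklist for word in words):
            return None  # refuse silently, as a manual block would
        return text

    return filtered


filtered_complete = with_filter(raw_complete)
print(raw_complete("forbidden topic"))
print(filtered_complete("forbidden topic"))
print(filtered_complete("sort a list"))
```

The point of the wrapper shape is that the model itself is untouched: the block sits outside it, which is why it can be blunt and why it cannot be prompted away.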

...why do they need to implement these blocks?