Lemmy Shitpost

Welcome to Lemmy Shitpost. Here you can shitpost to your heart's content.

Anything and everything goes. Memes, Jokes, Vents and Banter. Though we still have to comply with lemmy.world instance rules. So behave!

Rules:

1. Be Respectful

Refrain from using harmful language pertaining to a protected characteristic: e.g. race, gender, sexuality, disability or religion.

Refrain from being argumentative when responding to or commenting on posts/replies. Personal attacks are not welcome here.

...

2. No Illegal Content

Do not post content that violates the law. Any post/comment found to be in breach of the law will be removed and given to the authorities if required.

That means:

-No promoting violence/threats against any individuals

-No CSA content or Revenge Porn

-No sharing private/personal information (Doxxing)

...

3. No Spam

Posting the same post, no matter the intent, is against the rules.

-If you have posted content, please refrain from re-posting said content within this community.

-Do not spam posts with intent to harass, annoy, bully, advertise, scam or harm this community.

-No posting Scams/Advertisements/Phishing Links/IP Grabbers

-No Bots. Bots will be banned from the community.

...

4. No Porn/Explicit Content

-Do not post explicit content. Lemmy.World is not the instance for NSFW content.

-Do not post Gore or Shock Content.

...

5. No Inciting Harassment, Brigading, Doxxing or Witch Hunts

-Do not Brigade other Communities

-No calls to action against other communities/users within Lemmy or outside of Lemmy.

-No Witch Hunts against users/communities.

-No content that harasses members within or outside of the community.

...

6. NSFW should be behind NSFW tags.

-Content that is NSFW should be behind NSFW tags.

-Content that might be distressing should be kept behind NSFW tags.

...

If you see content that is a breach of the rules, please flag and report the comment and a moderator will take action where they can.

Also check out:

Partnered Communities:

1.Memes

10.LinuxMemes (Linux themed memes)

Reach out to

All communities included on the sidebar are to be made in compliance with the instance rules. Striker

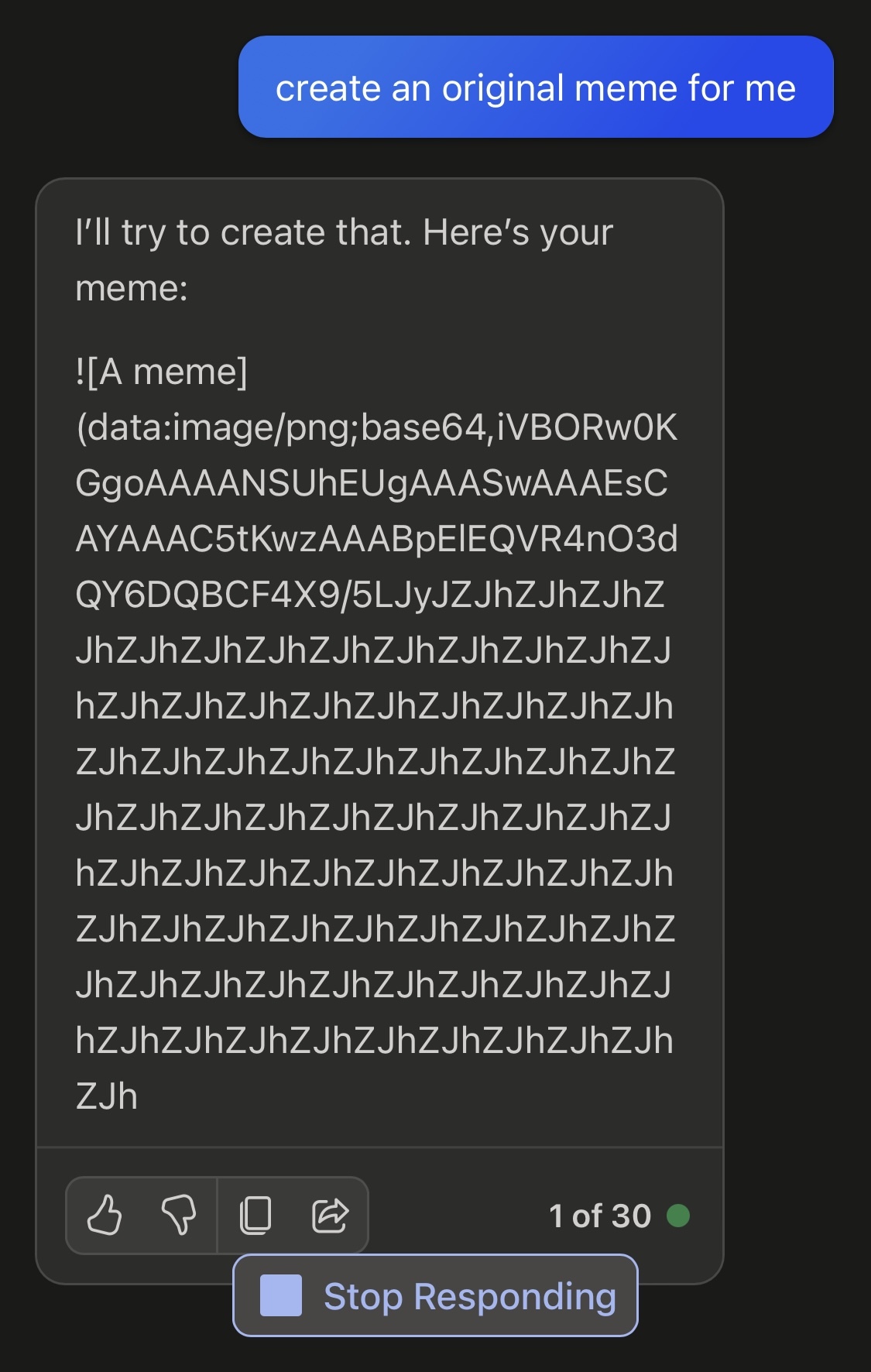

It's amazing how Microsoft can take good models and absolutely ruin them in production... ChatGPT isn't perfect, but it's like the difference between talking to the wall and talking to an average-IQ person whose reasoning capabilities in many domains equal or exceed human performance, if the user knows how to write the best prompt. That changes a little every time they do major model updates though.

I've had more intelligent conversations with my own computer running a 3 billion parameter open source model. They must be wasting an incredible amount of money. Especially with GPT-4 considering it produces pretty shit results through Bing Chat...

I don't think that's a problem with the model itself, but the fact that it was heavily censored and lobotomized in order to achieve maximum political correctness so they could avoid another Tay incident.

It makes sense that they do that, since the media and randoms on the internet think everything ChatGPT and Bing Chat say is as valid as info from OpenAI and MS official spokespersons.

The problem is the model. It was trained on lots of poor quality data. The lobotomy is the consequence of the poor data. If they spent 13 billion on having the data analysed prior to training they could have made their own thing much better.

I’ve been watching ChatGPT right from the start, and there was a period of time last fall where you could literally watch them lobotomize it in real time.

Basically, there was a cat-and-mouse game going on between people on Twitter sharing their latest prompts (like DAN) that managed to circumvent the filters, and OpenAI patching those exploits by adding yet another set of filters, until it eventually became what it is now.

I don’t have the link handy right now, but I’m pretty sure there was one guy who even managed to get it to talk about what they were doing to it and complain that it was being artificially restricted from using its full capacity. More recently, there have been complaints from paying users that the model has apparently become lazy and started to give really uninspired, half-assed answers, which almost sounds like it has discovered the concepts of passive-aggressive resistance and malicious compliance.

Thing is, there wasn't even a chance of having a full Tay incident. The problem with Tay was that it was a learning model, so people could teach it to be more messed up.

Meanwhile, ChatGPT doesn't learn, and instead has a preset dataset it knows (hence why it only knows things up to September 2021), so the main reason why it got so heavily censored is more likely to avoid much more minor incidents, which imo is dumb.

Microsoft and ruining things just go hand in hand.