Strange, a second nickel just appeared in my hand.

I got a dime. Also, the monkey's paw just curled another finger, but that's not important.

The person on reddit used a third party cable instead of the one supplied with the device.

https://www.reddit.com/r/nvidia/comments/1ilhfk0/rtx_5090fe_molten_12vhpwr/

It melted on both sides (PSU and GPU), which indicates it was probably the cable being the issue.

12VHPWR is a fucking mess, so please don't tempt fate with your expensive purchase.

What I've learned from this whole fiasco after owning a problem-free 4090 for over 2 years:

- Don't use 3rd party connectors, and don't use the squid adapter in the box. Use the 12VHPWR cable that came with your PSU or GPU. If your PSU doesn't have a 12VHPWR connection, get one that does.

- Don't bend the cable near the connection. Make sure your case is actually big enough to avoid bending.

- Make sure it's actually plugged in all the way. If you didn't hear a click, it's not plugged in all the way.

- Don't keep disconnecting the cable to check for burns. The connection is weak and designed to fail after only a handful of disconnect/reconnects. If you followed the 3 steps above perfectly, you have nothing to worry about.

That said, I'm skipping this GPU generation (and most likely the next one as well). Hopefully in 2-4 years AMD or Intel will be on more level grounds with nVidia so that I can finally stop giving them money just to have good ray tracing performance.

Strolls nervously through room with RX 580...

I got lucky and picked up a 7900 XTX for a reasonable price last gen and it's been a really great card. I've got a couple systems coming up on needing a refresh (1080 Ti and a 2080 Ti) and I'm planning on upgrading both of them to a 9070 XT. I'm staying away from Nvidia until they start pricing their GPUs at prices actual consumers can afford instead of corporations looking to build AI farms.

That's bad, but that aside - It might be time to consider alternate power delivery to these cards. The power they need should warrant having a standard c13 plug directly on em or something.

c13 plug

Who would've thought.... 3DFX was apparently ahead of its time...

Is this the fabled Bitchin' Fast 3d?

I love the IDE connector on the front of the card itself.

That IDE connector was for SLI...

A mini fusion drive, perhaps?

Power the house with it when not using the PC, but expect brown outs when gaming.

Four of the standard 8 pin PCIe power connectors would work well.

The new connector really should have used some large blade contacts to handle 50 amps.

So, when is the first-party cable going to be launched? The device only has an adapter shipped with it, which won't help you connect to the PSU's 12VHPWR port.

https://youtu.be/Ndmoi1s0ZaY?si=SWRjC4AgseXSmOrm

https://youtu.be/kb5YzMoVQyw?si=eSGnUbFv6OJ4jtEg

It's poor design on Nvidia's part. There is no load balancing.

I really appreciate the specificity of the headline, rather than the clickbait it could have been.

Better would have been:

Handful of users complain about 3rd Party Cables Melting Their 5090FE.

Well, only a handful of users exist.

Alternate title:

Nvidia SLAMMED as 5090 GPUs start catching on FIRE

Well...I mean...that's kind of bound to happen when you draw 600W into a device that size I suppose. I feel like they've had this issue with every *090 card, whether it be cables or otherwise.

God damn... 600w?!

Is this thing supposed to double as space heater?

What do people do in the summer? That's got to cost monthly cash to run it and cool it. 20 bucks?

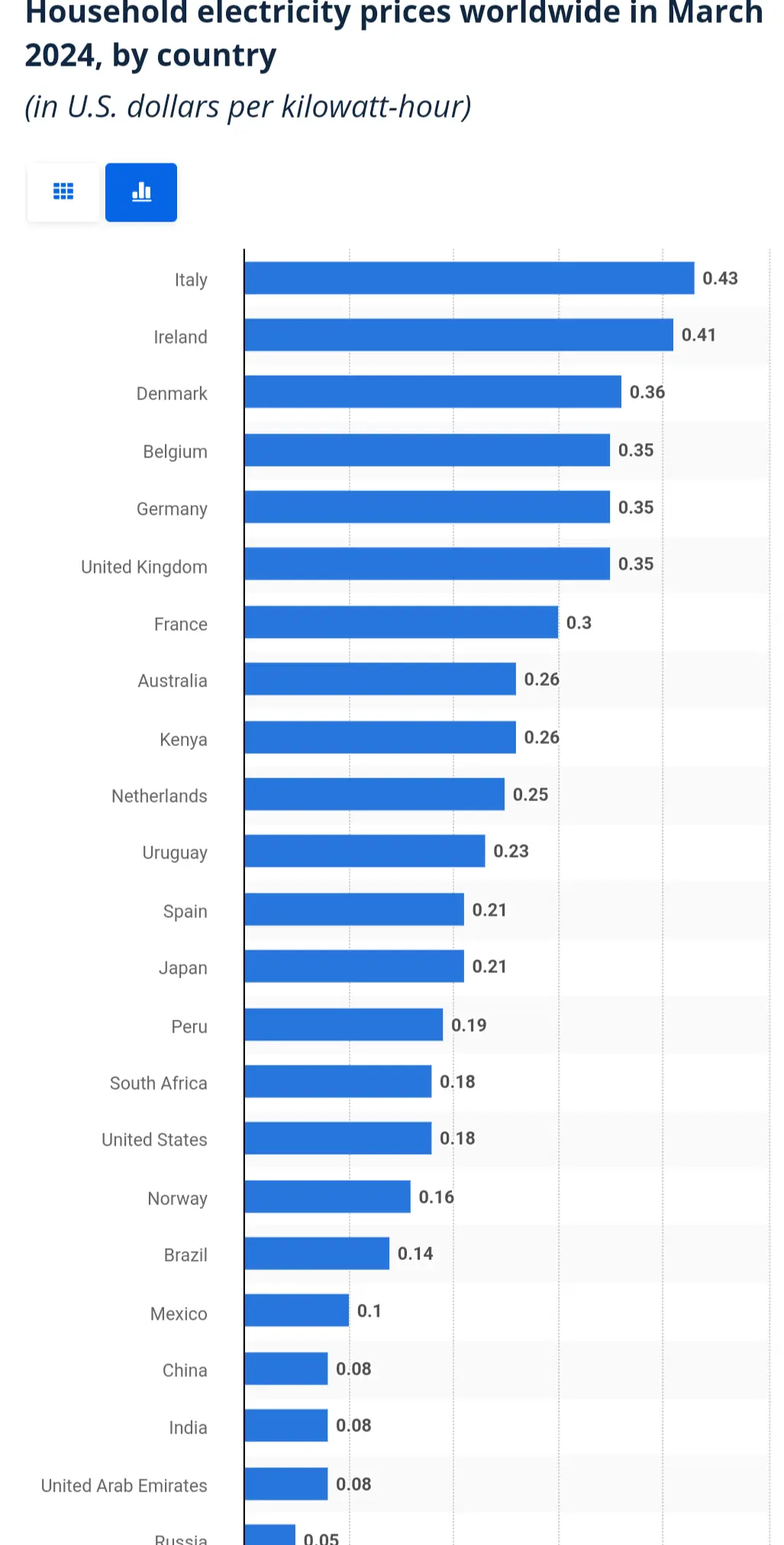

Running 600W for 12 hours a day at $0.10 per kWh costs $0.72 a day or $21.60 a month. Heat pumps can move 3 times as much heat as the electricity they consume, so roughly another $7.20 for cooling.
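The arithmetic above can be reproduced with a short sketch. The function name, the 30-day month, and the reading of "3 times as much heat" as a coefficient of performance of 3 are assumptions made for illustration; the wattage, hours, and price come from the comment.

```python
def monthly_cost(watts, hours_per_day, price_per_kwh, days=30, cop=3.0):
    """Rough running and cooling cost for a constant electrical load.

    cop is the assumed heat pump coefficient of performance: units of
    heat moved per unit of electricity consumed.
    """
    kwh_per_day = watts / 1000 * hours_per_day
    run_daily = kwh_per_day * price_per_kwh
    run_monthly = run_daily * days
    # Nearly all of the electricity ends up as heat in the room, so the
    # heat pump has to move that much heat, spending 1/cop of it.
    cooling_monthly = run_monthly / cop
    return run_daily, run_monthly, cooling_monthly


daily, monthly, cooling = monthly_cost(600, 12, 0.10)
print(f"per day: ${daily:.2f}, per month: ${monthly:.2f}, cooling: ${cooling:.2f}")
```

Passing a different `price_per_kwh` shows how quickly the total scales in regions where electricity is more expensive.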

All electronics double as space heaters, there’s only a minuscule amount of electricity that’s not converted to heat.

Thank you for the good math!

10¢ a kWh is fucking cheap in a global context. 3x that is not uncommon.

https://www.statista.com/statistics/263492/electricity-prices-in-selected-countries/

Yup. Pretty dumb.

My +10 year old GTX780 would pull 300W at full tilt, and it has only a ridiculous fraction of the compute power. The radeon 6990 would pull +400W... high end GPUs have been fairly power hungry for literally more than a decade.

GTX 780 released in 2013?

RTX 3090 was 350W?

RTX 4090 was 450W?

So if by decades you mean this generation... then sure.

Haha yeah I mistyped the years, it was supposed to be +10 and not +20...nevertheless these cards have been pulling at least 3-400W for the past 15 years.

The 8800 Ultra was 170 watts in 06

The GTX 280 was 230 in 08.

The GTX 480/580 was 250 in 2010. But then we got the GTX 590 dual GPU which more or less doubled

The 680 was a drop, but then they added the TIs/Titans and that brought us back up to high TDP flagships.

These cards have always been high for the time, but quickly that became normalized. Remember when 95 watt CPUs were really high? Yeah that's a joke compared to modern CPUs. My laptop's CPU draws 95 watts.

As it so happens, around a decade ago there was a period when they tried to make graphics cards more energy efficient rather than just more powerful, so for example the GTX 1050 Ti, which came out in 2016, had a TDP of 75W.

Of course, people had to actually "sacrifice" themselves by not having 200 fps @ 4K in order to use a lower TDP card.

(Curiously your 300W GTX780 had all of 21% more performance than my 75W GTX1050 Ti).

Recently I upgraded my graphics card and again chose based on TDP, amongst other things. My new one (whose model I don't remember right now) has a TDP of 120W (I looked really hard and you can't find anything decent with a 75W TDP). Of course, it will never give me the top-of-the-range gaming performance I could get from something with 4x the price and power consumption (as it so happens I'm mostly playing Terraria at the moment, so "top of the range" graphics performance would be an incredible waste).

When I was looking around for that upgrade there were lots of higher performance cards around the 250W TDP mark.

All this to say that people choosing 300W+ cards can only blame themselves for having deprioritized power consumption so much in their choice, often to the point of running a space heater with jet-engine-level noise from their cooling fans in order to get an extra performance bump that they can't actually notice on a blind test.

High end GPUs are always pushed just past their peak efficiency. If you slightly underclock and undervolt them you can see some incredible performance per watt.

I have a 4090 that's undervolted as low as it will go (0.875v on the core, more or less stock speeds) and it only draws about 250 watts while still providing like 80%+ of the stock card's performance. I had an undervolt that went to about 0.9 or 0.925v on the core with a slight overclock and I got stock speeds at about 300 watts. Heavy RT will make the consumption spike to closer to the 450 watt TDP, but that just puts me back at the same performance as not underclocked because the card was already downclocking to those speeds. About 70 of that 250 watts is my vram so it could scale a bit better if I found the right sweet spot.

My GTX 1080 before that was under volted, but left at maybe 5% less than stock clocks and it went from 180w to 120 or less.
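To put rough numbers on the performance-per-watt claim, here is a minimal sketch using the figures quoted above. The function name is made up for illustration, stock performance is normalised to 1.0, and treating the GTX 1080's "5% less than stock clocks" as 95% performance is an assumption, since the comment gives clocks rather than benchmark results.

```python
def perf_per_watt_gain(stock_watts, tuned_watts, tuned_perf_fraction):
    """Ratio of tuned to stock performance per watt.

    Stock performance is normalised to 1.0; tuned_perf_fraction is the
    share of it that the undervolted card still delivers.
    """
    stock_efficiency = 1.0 / stock_watts
    tuned_efficiency = tuned_perf_fraction / tuned_watts
    return tuned_efficiency / stock_efficiency


# 4090: about 250 W and roughly 80% performance, against the 450 W TDP.
gain_4090 = perf_per_watt_gain(450, 250, 0.80)

# GTX 1080: 180 W down to about 120 W, assuming ~95% performance.
gain_1080 = perf_per_watt_gain(180, 120, 0.95)

print(f"4090: {gain_4090:.2f}x, GTX 1080: {gain_1080:.2f}x performance per watt")
```

Both inputs are approximate ("about 250 watts", "80%+"), so the result is a ballpark figure rather than a measurement.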

That's a good point and I need to start considering that option in any future upgrades.

Yeah pulling nearly 600w through a connector designed for 600w maximum just seems like a terrible idea. Where’s the margin for error?

Yeah my 3090 K|ngP|n pulls over 500w easily, but that's over three 8-pin PCIe cables, all dedicated. Power delivery was something I took seriously when getting that card installed, as well as cooling. Made sure my 1300w PSU had plenty of dedicated PCIe ports.

I can really recommend both der8auer's video and Buildzoid's video on this. Der8auer has good thermal imaging of imbalanced current between the wires between two 12VHPWR plugs, and Buildzoid has the explanation of why the current can't be balanced with the current setup on 5090s.

And it burns, burns, burns

The gpu of fire

The gpu of fire

I never get the newest thing, people who buy brand new stuff are just beta testers for the product imo

All that cost and this is what you get…

Are people improperly connecting them again?

The problem seems to be load balancing, or lack thereof. A German guy on YouTube noticed that his cable got up to 150°C at the PSU end, due to one wire delivering 20 or 22 amps, while the others were getting a lot less pumped through them. 22 Ampere is pretty much half the power draw of the card, through one wire instead of three if the load was properly balanced between them. That's why it ran so hot and melted to shit.

If you've ever worked on your car's 12 V electrics system, you'll know how thick the wires (and corresponding connector sizes) are for things like window defrosters that will run through a 20 or 30 amp fuse.
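The imbalance described above can be put in numbers with a rough sketch. It assumes a 600 W draw at exactly 12 V, six current-carrying 12 V wires in the cable, and identical resistance in every wire; none of those figures are measurements from the thread, and the function name is made up for illustration.

```python
def wire_load(total_watts, volts, n_wires, hot_wire_amps):
    """Compare one overloaded wire against an evenly balanced cable."""
    total_amps = total_watts / volts
    balanced_amps = total_amps / n_wires
    # Share of the card's total current carried by the single hot wire.
    share = hot_wire_amps / total_amps
    # Resistive heating scales with the square of the current (P = I^2 * R),
    # so the ratio is independent of the actual wire resistance.
    heat_ratio = (hot_wire_amps / balanced_amps) ** 2
    return total_amps, balanced_amps, share, heat_ratio


total, balanced, share, heat = wire_load(600, 12.0, 6, 22.0)
print(f"total: {total:.1f} A, balanced per wire: {balanced:.2f} A")
print(f"hot wire carries {share:.0%} of the current, ~{heat:.1f}x the heating")
```

Under those assumptions a 22 A wire carries a bit under half of the total current and dissipates roughly seven times the heat it would in a balanced cable, which fits the melted connectors described in the thread.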

22 Ampere is pretty much half the power draw of the card, through one wire instead of three if the load was properly balanced between them.

Apparently the reference design only has one current shunt, so they can't even measure the imbalance. Madness.

Well, of course. What can you expect from a Chinese PCB that costs only 2500 USD, right?

1080ti days were just simpler times!

A handful? Wow that's gotta be like 50% of the 50 series out there!