You don’t. If you’re born on one side of the wall, that’s exactly where you’ll stay. DOGE decided that this is how we can make all immigration, tourism and travel-related bureaucracy more efficient. No paperwork, no employees needed. So much cheaper that way.

While you’re at it, here’s an idea for the new name: Tyrannical Dictatorship of the Oppressed States of America.

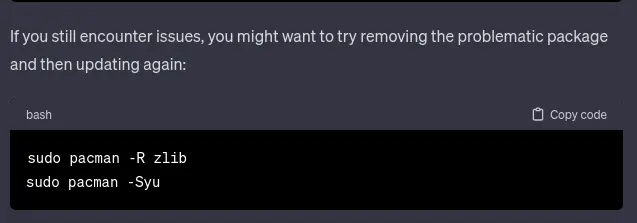

Unexpected Debian.

You could always rewrite the Constitution so that it says Trump is the Eternal Leader of the USA.

Best of all, since it’s “made in America”, all of that money will definitely support American manufacturers. None of that money will be going to China.

Latitude and clouds really matter in these calculations. Further up north, the light gets weaker, so you’ll need to compensate with more panels. Also, the sunny hours fluctuate wildly between the short days in winter and short nights in summer.

The article mentioned Birmingham, and in that case, solar is just one of the many power sources they’ll need. Solar can support the mix, and in the summer it could even dominate for a while. They’ll still need a lot more from other sources.

The closer to the equator you are, the more sense it makes to use solar power. In places like Germany it’s already fine; in Greece it’s really good. Anywhere south of that, it’s clearly the best solution.

Oh, that’s so delightfully clever. Good job!

Makes things shorter.

In the applications mentioned by other people, you run into calculations that would look really messy and confusing. Something like 5•4•3•2•1 can be shortened to just 5! Imagine writing out the full version of 123!
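For anyone curious, here’s a minimal Python sketch of what the notation stands for. The `factorial` function below is just my own illustration; for real use, Python’s standard library already has `math.factorial`, which the sketch is checked against.

```python
import math


def factorial(n: int) -> int:
    """Return n! = n * (n - 1) * ... * 2 * 1, with 0! = 1 by convention."""
    if n < 0:
        raise ValueError("factorial is undefined for negative integers")
    result = 1
    # Multiply every integer from 2 up to n into the running product.
    for k in range(2, n + 1):
        result *= k
    return result


# 5! is shorthand for 5 * 4 * 3 * 2 * 1
print(factorial(5))  # 120

# 123! written out would be a product of 123 factors;
# even the result is a very long number, so just count its digits.
big = factorial(123)
print(len(str(big)))

# Sanity check against the standard library implementation.
assert big == math.factorial(123)
```

That one character after the 5 saves you from writing out every factor, which is the whole point of the notation.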

Janet’s tears weren’t just about loss; they were the raw, ugly realization that the system she’d championed had no loyalty to people like her spouse.

There’s the root cause. Next time, don’t expect the system to have any loyalty towards anyone.

So… Cold War 2? Hybrid war? What should we call this?

The conversation probably went something like this:

Dude 1: Yo, I'm like, sky-high, man!

Dude 2: No way, bro! Where'd ya cop this fire?

Dude 1: Beats me, man. I was already blitzed when I scored it.

Dude 2: Duuuude, that's wicked!

Dude 1: Ayo, listen up, I just had this like, mind-blowing epiphany. Check it, bro. This is gonna flip the script, man. Let's slap up a fence right at the entrance to the parking lot.

Dude 2: Totally, let's make it happen. It'll be like, the ultimate vibe killer for the fuzz, man. They'll never see it comin'. Plus, think about the street cred we'll get. We'll be legends, man. Legends!

The boys went and slapped up a fence right there, and stopped thinking about it any further. Who needs brains when you’re a total legend?

So, not a lot of anime stuff in China? Why would there be any? That’s a Japanese thing, so obviously Chinese people couldn’t care less.