Is the plan for AI to give tech plausible deniability when it lies about politics and other mis/disinformation?

AI has its uses: I would love to read AI-written books in fantasy games (instead of the 4-page books we currently have) or talk to an AI in the next RPG. Hell, it might even make better randomly generated quests and such things.

You know, places where hallucinations don't matter.

AI as a search engine only makes sense when/if they ever find a way to avoid hallucinations.

That's probably why I end up arguing with Gemini. It's constantly lying.

Sounding plausible is all they're trained for. The whole setup was designed to avoid wishy-washy "I don't know" responses. Which is great, if you want to ask what Lord Of The Rings would sound like if Eminem wrote it, but we're treating that improv exercise like a magic eight-ball.

The most frustrating part of it is seeing people expect something else. And I'm including commenters here. 'I told the word-guessing machine to stop guessing words, and it just guessed more words!' 'Why doesn't the text robot know which icons this program has?' Folks, hallucination is a technical term, and it's the function that makes this work at all. Everything it does is hallucinated. Some of that happens to match reality. Reality tends to be plausible.

The problem in full is that it's shoved onto the public and presented as science fiction. As if Google submits your questions to the Answertron 5000 which spits out a little receipt printed in all-caps. But there's no good-old-fashioned if-then logic involved. It's algebra soup. We gave up trying to be clever and did whatever got results. Now you can ask for anything you want, and it's usually close enough.

You can also ask how tall the king of Madeupistan is, and it'll probably play along.

Playing along is how it works.

This specific task, picking up info from a text, is broadly similar to an early illustrative fuckup: acronyms. GPT3-ish struggled to get acronyms right when it was told to write fiction. It'd make up the name of some institution or company, and then drop a parenthetical which almost matched those initial letters. Something like "meanwhile, the Bureau of Silly Names (BNS) says..."

It's a simple task. The machine knows what a right answer looks like. But it's only guessing, and if it guesses wrong, it goes 'oh well' and plows ahead.
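To show how simple the check is when done with plain old rules, here's a minimal sketch in Python. The function names are made up for illustration, and it assumes an acronym is just the first letter of each capitalized word (so lowercase words like "of" are skipped). Real acronyms break that rule all the time, so treat it as a toy.

```python
import re


def expected_acronym(name: str) -> str:
    """Build an acronym from the capitalized words of a name.

    Assumption for this sketch: lowercase words such as "of" are skipped.
    """
    words = re.findall(r"[A-Za-z]+", name)
    return "".join(word[0] for word in words if word[0].isupper())


def acronym_matches(name: str, acronym: str) -> bool:
    """Return True if the given acronym matches the name's initials."""
    return expected_acronym(name) == acronym.upper()


if __name__ == "__main__":
    name = "Bureau of Silly Names"
    print(expected_acronym(name))        # BSN
    print(acronym_matches(name, "BNS"))  # False
    print(acronym_matches(name, "BSN"))  # True
```

A deterministic check like this either passes or fails. The text generator has no such step; it just emits whatever letters seem likely and keeps going.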

Identifying the source of an article is very different from the common use case for search engines.

1:1 quotes of web pages are something conventional search engines are very good at. But usually you aren't quoting pages 1:1.

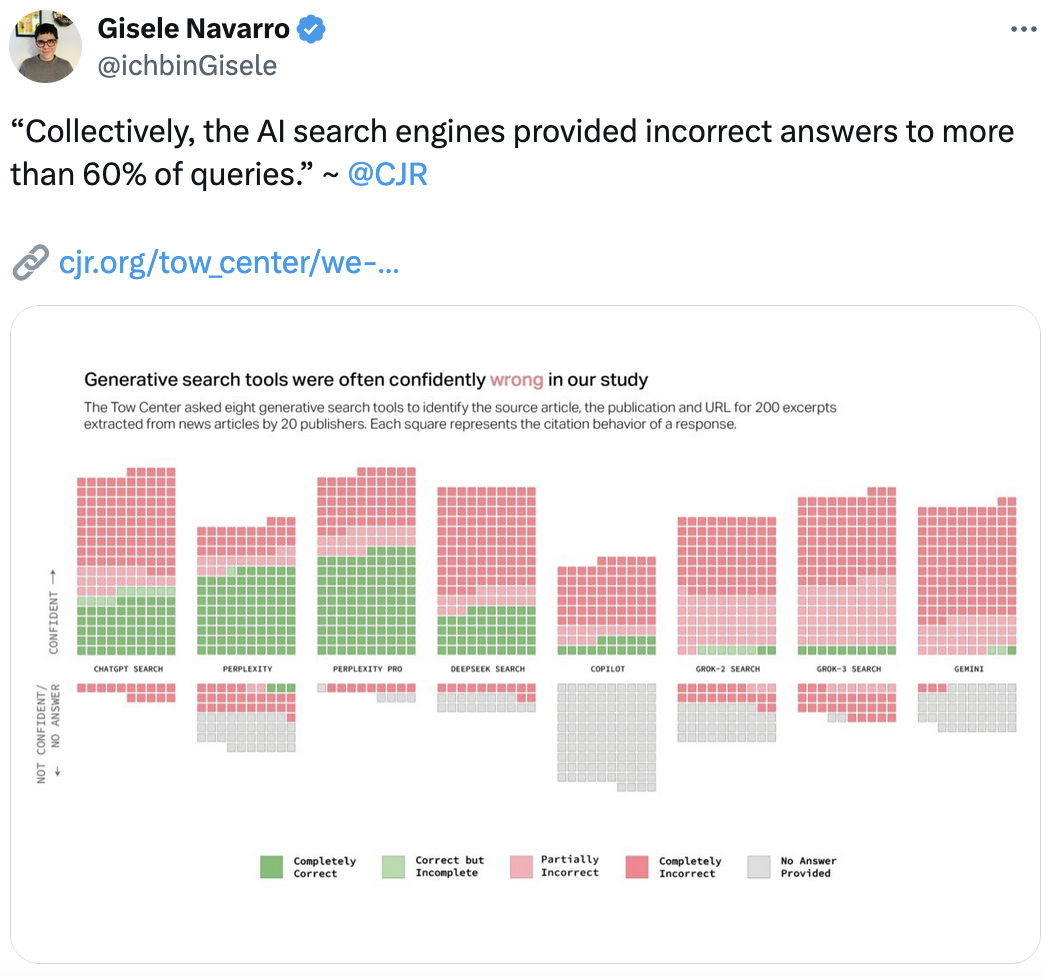

I like how when you go pro with Perplexity, all you get is more wrong answers.

That's not true, it looks like it does improve. More correct and so-so answers.

Go figure, the one providing sources for answers was the most correct... But pretty wild how it basically leaves the others in the dust!

AI can be a load of shite but I’ve used it to great success with the Windows keyboard shortcut while I’m playing a game and I’m stuck or want to check something.

Kinda dumb but the act of not having to alt-tab out of the game has actually increased my enjoyment of the hobby.