So I was playing around with some coding models and getting disappointed with the responses. I started using starcoderplus-guanaco-gpt4, and after some tinkering I just wanted to share the importance of formatting your prompt correctly.

I asked it for a way to rate limit a function in Python based on the function's input, so that it doesn't repeat identical output too often.

I used the following prompt:

Create a python function that takes a string as input and prints that string. The function should be rate limited so that any specific string is not printed more than once every two minutes. This means it must keep track of the last time that it printed a specific string.
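For reference, here is a rough sketch of the behavior that prompt describes. It is only an illustration and not the output of either model; the names `print_rate_limited`, `RATE_LIMIT_SECONDS`, and `_last_printed`, as well as the optional `now` argument (there just to make testing easier), are made up for this example.

```python
import time

# Hypothetical names; the two-minute window comes from the prompt.
RATE_LIMIT_SECONDS = 120
_last_printed = {}  # maps each string to the time it was last printed


def print_rate_limited(text, now=None):
    """Print text unless the same text was printed less than two minutes ago.

    Returns True if the text was printed, False if it was suppressed.
    """
    # `now` is only a testing hook; normally the monotonic clock is used.
    if now is None:
        now = time.monotonic()

    last = _last_printed.get(text)
    if last is not None and now - last < RATE_LIMIT_SECONDS:
        return False  # same string printed too recently, skip it

    _last_printed[text] = now
    print(text)
    return True
```

Calling `print_rate_limited("hello")` twice in a row should print once and skip the second call, while a different string still prints right away because each string is tracked separately.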

However, I used it in the chat-completion UI of text-generation-webui, and this was the useless reply I got:

Obviously completely useless to me

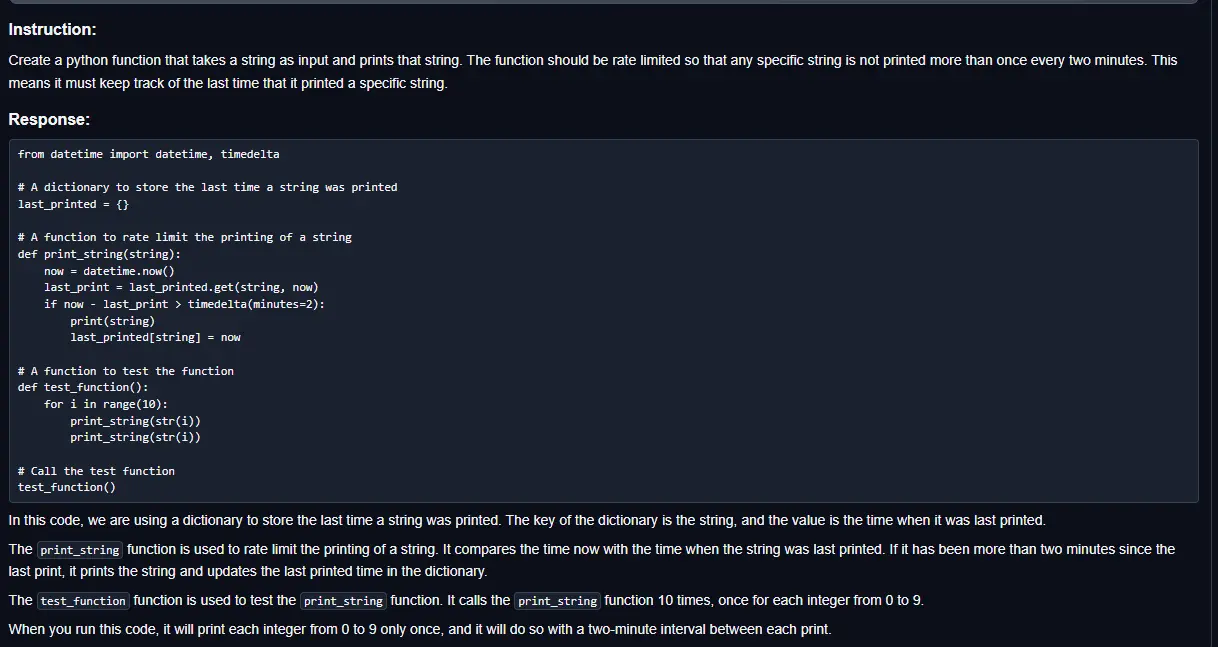

But then I realized that this model expects to follow instructions, not hold a chat, so I switched over to the instruction template. Now this was my "prompt":

### Instruction: Create a python function that takes a string as input and prints that string. The function should be rate limited so that any specific string is not printed more than once every two minutes. This means it must keep track of the last time that it printed a specific string.

### Response:

And lo and behold, a very competent useful reply!

As you can see, even if you follow the proper concept for instruct (providing it as instructions 'Create a python function that..' rather than 'I need a function that..'), you still need to be sure to follow the proper template structure.

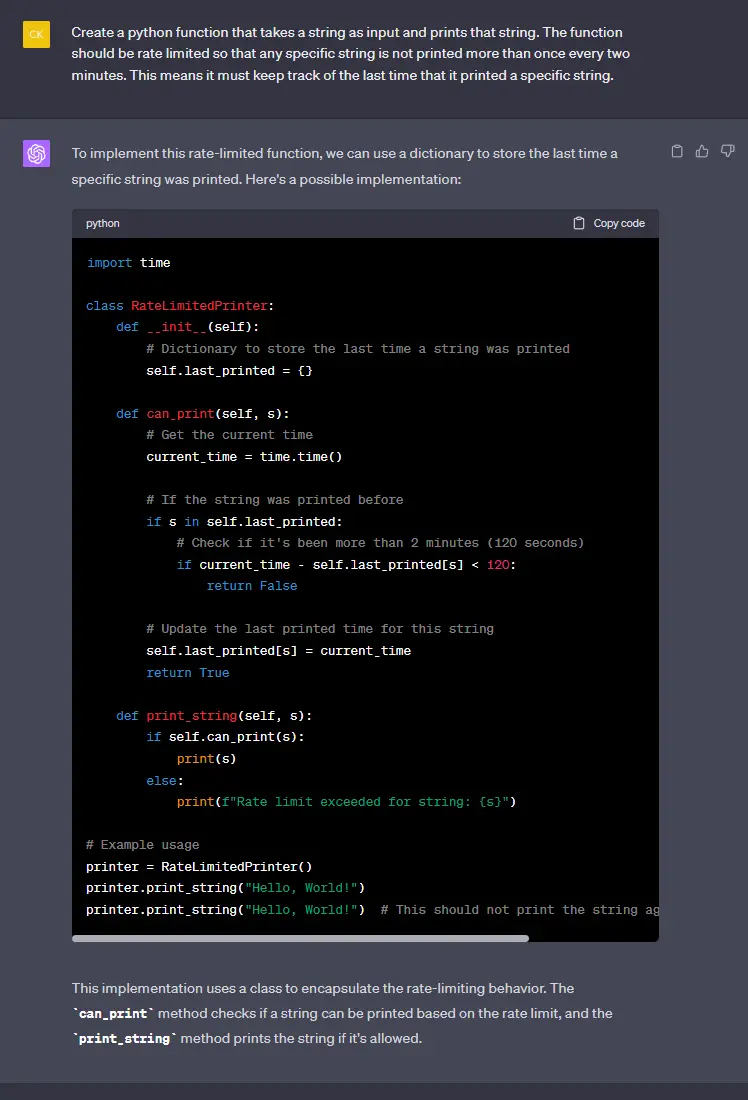
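If you're sending prompts from a script instead of the UI, the same wrapping can be done in code. A minimal sketch, assuming the `### Instruction:` / `### Response:` layout used in this post; `build_instruct_prompt` is a made-up helper name, and other models use other templates, so check the model card for the exact format.

```python
def build_instruct_prompt(instruction):
    """Wrap a task in the '### Instruction: / ### Response:' layout from this post."""
    # Assumption: this layout matches the model in use; other models differ.
    return f"### Instruction: {instruction.strip()}\n\n### Response:"


task = "Create a python function that takes a string as input and prints that string."
prompt = build_instruct_prompt(task)
print(prompt)
```

The prompt ends right after `### Response:`, so the model's completion starts where the reply is expected.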

And most interestingly of all, giving the same prompt to ChatGPT gets what I consider to be a worse answer.

It's very similar, but to my eye distinctly overengineered. I find the solution from starcoder answers my question much more closely, with only a couple of lines of code to change in my existing function. YMMV, but the TL;DR is that you should make sure to follow the proper prompt and template formats to get the best replies from your model.