this post was submitted on 23 Jan 2024

linuxmemes

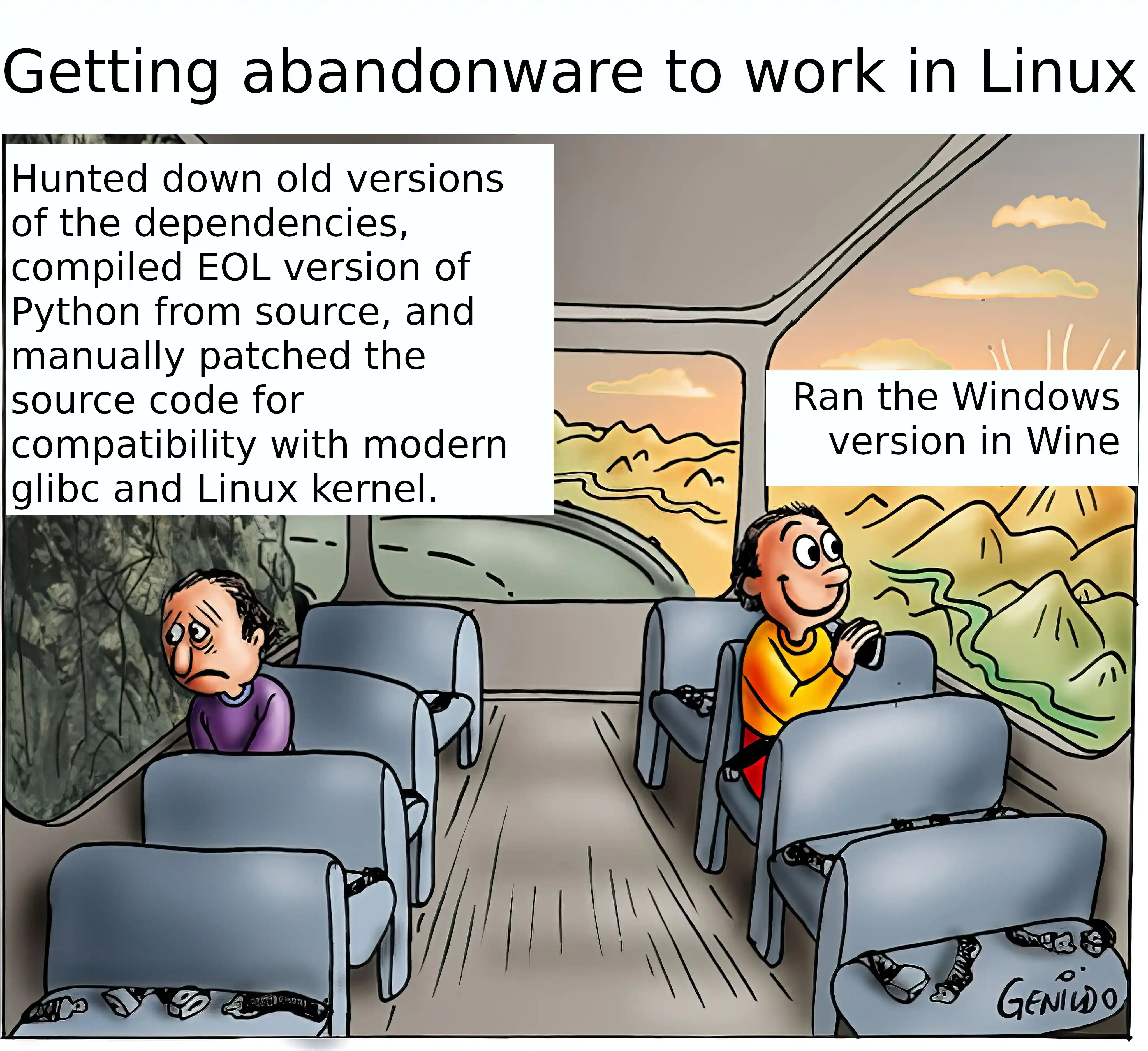

One day someone posts about how all Linux programs run forever and Windows creates abandonware

Another day someone complains about the Linux version of his program not running anymore so he has to use the Windows version

I'm not sure what's going on anymore

In the Unix world, truly great programs tend to stay around forever.

`less` has been around since 1983. `grep` was there ten years earlier. Linux users love `vim`. What does the "v" stand for, you ask? "Visual", of course, because it was one of the first text editors to offer support for computer monitors. And before that, when we had teletypes, people used `ed`, which still comes pre-installed with Ubuntu. Not to mention that the modern linux terminal is basically emulating (that's why we called them terminal emulators) an electronic typewriter with some extra extensions for color and cursor support. They're backwards compatible to this day. That's why it says tty (teletype) when you press `ctrl-alt-F2`.

The caveat is that these examples are all low-level programs that have few dependencies. And they are extremely useful, therefore well-maintained. When it comes to more complex programs with a lot of dependencies, unless there is someone to keep it updated with the latest versions of those dependencies, it will eventually get broken.

The reason this happens less often in W*ndows is because w*ndows historically hasn't had a package manager, forcing devs to bundle all their dependencies into the executables. Another part of the reason is that m*cros*ft would lose a lot of business customers if they broke some obscure custom app with a new update, so they did their best to keep everything backwards compatible. Down to the point of forbidding you from creating a file named `AUX` in order to keep support for programs written for QDOS, an OS that predates directory support.

Thanks that's pretty informative

Why isn't there a way for Linux users to automatically install every missing dependency for a program? Not sure if this will net me a ban here but the W*ndows way kind of looks superior here. Having old programs break with updates is a massive pain.

Great question! There is. What you’re describing is a package manager. Overall, they are a great idea. It means devs can create smaller “dynamically linked” executables that rely on libraries installed by the package manager. The w*ndows equivalent of this is using DLLs. Another advantage is that urgent security updates can be propagated much faster, since you don’t have to wait for each app that uses a vulnerable library to update it on their own. Also, dynamically linked executables can help save on RAM usage. With statically linked executables, everyone brings their own version of some library, all of them off by a few minor revisions (which all have to be loaded into RAM separately), whereas a bunch of dynamically linked executables can all point to the same version (which only needs to be loaded once), which is what package maintainers often do. Finally, package managers eradicate the need for apps to include their own auto-updaters, which benefits both developers and users.
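To make the "dynamically linked" idea a bit more concrete, here is a minimal Python sketch that borrows `strlen` from the C library already installed on the system instead of shipping its own copy. It assumes a Unix-like system where Python itself is dynamically linked against libc; the helper names `load_shared_libc` and `shared_strlen` are made up for this example.

```python
import ctypes
import ctypes.util


def load_shared_libc() -> ctypes.CDLL:
    """Open the system C library, much like a dynamically linked program does."""
    # find_library may return None on minimal systems. CDLL(None) then falls
    # back to the symbols already loaded into the current process.
    path = ctypes.util.find_library("c")
    return ctypes.CDLL(path)


def shared_strlen(text: bytes) -> int:
    """Call strlen from the shared libc rather than bundling our own copy."""
    libc = load_shared_libc()
    libc.strlen.argtypes = [ctypes.c_char_p]
    libc.strlen.restype = ctypes.c_size_t
    return libc.strlen(text)


if __name__ == "__main__":
    print(shared_strlen(b"package manager"))
```

If the distribution ships a patched libc, this script picks it up on the next run without being changed itself, which is the security-update advantage in miniature.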

This model goes wrong when software depends on an outdated library. Even if the package maintainers still provide support for that outdated version, often it’s difficult to install two wildly different versions of a library at the same time. And apart from libraries, there are other things that a program can depend on, such as executables and daemons (aka background processes aka services), old versions of which are often even more difficult to get running along with their modern counterparts.
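A toy model of that failure mode, as a rough sketch only: assume the system can hold just one major version of each library (real package managers are more nuanced than this), and check which libraries are wanted in incompatible versions. `App`, `find_conflicts`, and the package names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class App:
    name: str
    # Maps a library name to the major version this app was built against.
    needs: dict = field(default_factory=dict)


def find_conflicts(apps):
    """Return the libraries that different apps need in different major versions."""
    wanted = {}
    for app in apps:
        for lib, major in app.needs.items():
            wanted.setdefault(lib, {}).setdefault(major, []).append(app.name)
    # With one slot per library, more than one requested version is a conflict.
    return {lib: versions for lib, versions in wanted.items() if len(versions) > 1}


if __name__ == "__main__":
    apps = [
        App("legacy-app", {"libfoo": 1}),
        App("modern-app", {"libfoo": 3, "libbar": 2}),
    ]
    print(find_conflicts(apps))
```

Here `libfoo` shows up as a conflict because the abandoned app still wants version 1 while everything else has moved on to version 3.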

So when you say that the "W*ndows way kind of looks superior here", you are right about the specific edge case of running legacy apps. It just happens that the Linux crowd has historically decided that the other benefits of package managers outweigh this disadvantage.

There are tools for developers to bundle dependencies. Statically linked binaries, "portable" apps, AppImage, and so on... It's just that package managers are so widespread (because of the aforementioned benefits), few developers bother with these. The general attitude is "if you want a statically linked executable, go compile it yourself". And by the time it's time to make an "archiveable" version of an app because it's abandoned... nobody bothers, because it's, well, abandoned.

However, as disk capacity and ram size steadily increase, people are starting to question whether the benefits of traditional package managers really outweigh the added maintenance cost. This, combined with the recent development of a linux kernel feature called "namespaces", has spawned various new containerization tools. The ones I am familiar with are Docker (more suited for developer tools and web services), and Flatpak (more suitable for end-user desktop apps). I personally use both (flatpak as a user, and docker as both a user and a developer), and it makes my life a whole lot easier.

As for what makes it easier for users to get old apps working (which is what you're asking), well... that's sort of what we are discussing in this thread. Again, these tools aren't very widespread, because there is rarely a practical reason for running legacy programs, other than archivism or nostalgia. More often than not, modern and maintained alternatives are available. And when there is a practical reason, it is often in the context of development tools, where the user is probably skilled enough to just Dockerize the legacy program themselves (I did this a couple times at a job I used to have).

There's pros and cons. On one hand, packing your dependencies into your executable leads to never having to worry about broken dependencies, but also leads you into other problems. What happens when a dependency has a security update? Now you need an updated executable for every executable that has that bundled dependency. What if the developer has stopped maintaining it and the code is closed source? Well, you are out of luck. You either have the vulnerability or you stop using the program. Additionally bundling dependencies can drastically increase executable size. This is partially why C programs are so small, because they can rely on glibc when not all languages have such a core ubiquitous library.

As an aside, if you do prefer the bundled dependency approach, it is actually available on Linux. For example, you can use appimages, which are very similar to a portable exe file on windows. Of course, you may run afoul of the previously mentioned issues, but it may be an option depending on what was released.

Do you happen to know what (if any?) technical advantages appimage has over "portable" applications (i.e. when the app is distributed as a zipped directory containing the executable, libraries, and all other resources)? As far as I understand, appimage creates an overlay filesystem that replaces/adds your system libraries with the libraries that the packaged app needs? But why would that be necessary if you can just put them in a folder along with the executable and override `LD_LIBRARY_PATH`?

Thanks
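For reference, the folder-plus-`LD_LIBRARY_PATH` approach described in the question could be sketched roughly like this. The directory layout (`lib/` next to `bin/app`) and the function names are assumptions for illustration, not a convention any particular project uses. On Linux the dynamic loader generally searches `LD_LIBRARY_PATH` before the default system directories, which is what makes the override work.

```python
import os
import subprocess
from pathlib import Path


def portable_env(app_dir, base_env=None):
    """Build an environment where the app's bundled lib/ directory is searched first."""
    env = dict(os.environ if base_env is None else base_env)
    lib_dir = str(Path(app_dir) / "lib")
    existing = env.get("LD_LIBRARY_PATH", "")
    # Prepend so the bundled libraries win over any path that was already set.
    env["LD_LIBRARY_PATH"] = lib_dir + (os.pathsep + existing if existing else "")
    return env


def launch(app_dir, executable="bin/app", args=()):
    """Run the bundled executable with the adjusted library search path."""
    command = [str(Path(app_dir) / executable), *args]
    return subprocess.call(command, env=portable_env(app_dir))
```

A wrapper like this only redirects the library lookup. Anything else the app expects at fixed paths (data files, helper executables) still has to be handled separately.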

There is; actually there are several. Every^* distribution has a package manager, that's what it does. But you have to make a package for the program, similar to what the tegaki folks have done for Mac and Windows.

Another option is to statically link everything.

One issue is the fragmentation; because there are so many Linux distributions, it's hard to support packages for all of them. This is one thing that Flatpak aims to solve.

I would expect this to be an issue for old closed-source software, but not for old free software. Usually there's someone to maintain packages for it.

Some cursory searching shows no tegaki package on flathub or in nix (either of these can be used on any distro; the nix one is surprising to me; it hosts soooo many packages).

But I do see it in Debian: https://packages.debian.org/search?suite=default&section=all&arch=any&searchon=names&keywords=tegaki

I'm just getting started with nix, if I'm understanding it correctly I think that is kind of what nix package manager does? It keeps packages and their versions separate and doesn't delete them, so that you can update some programs and their dependencies without breaking other programs that depend on other versions of those same dependencies. https://www.linux.com/news/nix-fixes-dependency-hell-all-linux-distributions/

Yep, that's the gist. Nix build is reasonably good at spitting out what's missing (if you're packaging a random git repo) and nix-init gives you a great starting point, but it will generally need some tweaking to get the package running / installing.
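The "keep every version separate" idea from this exchange can be illustrated with a small toy model. This is not how Nix actually computes its store paths (the real thing hashes the full build inputs); `/toy-store`, `store_path`, and the package names are invented for the sketch.

```python
import hashlib


def store_path(name, version, deps=()):
    """Derive a directory name from a package and the exact paths it depends on."""
    key = "|".join([name, version, *sorted(deps)])
    digest = hashlib.sha256(key.encode()).hexdigest()[:12]
    return f"/toy-store/{digest}-{name}-{version}"


if __name__ == "__main__":
    # Two versions of the same library get different paths, so both can stay installed.
    old_lib = store_path("libfoo", "1.0")
    new_lib = store_path("libfoo", "3.0")

    # Each app records the exact library path it was built against.
    legacy_app = store_path("legacy-app", "0.9", deps=[old_lib])
    modern_app = store_path("modern-app", "2.1", deps=[new_lib])

    for path in (old_lib, new_lib, legacy_app, modern_app):
        print(path)
```

Because the path depends on the version and on the dependencies, updating `libfoo` for one app produces a new directory instead of overwriting the one the legacy app still points to.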