this post was submitted on 30 Jun 2023


Standardization


The scale was intentionally designed to have a higher granularity than the existing Rømer scale, and it was kept even after Celsius designed his scale because of that higher granularity. That is what the person you replied to is getting at. The Fahrenheit scale gives you many more whole-number values for a given temperature range than the Celsius scale does. Yes, you can express any temperature in either scale, but as a random example, 123 degrees Fahrenheit is 50.55556 degrees Celsius. Which is easier to read? The whole Fahrenheit number.
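A minimal sketch of the conversion in that example, assuming the standard definition C = (F − 32) × 5/9. Exact fractions make it clear that 123 °F has no finite decimal form in Celsius; the function name is just illustrative:

```python
from fractions import Fraction

def fahrenheit_to_celsius(f):
    """Convert a Fahrenheit reading to Celsius exactly: C = (F - 32) * 5/9."""
    return (Fraction(f) - 32) * Fraction(5, 9)

c = fahrenheit_to_celsius(123)
print(c)                     # 455/9, a repeating decimal in base 10
print(round(float(c), 5))    # 50.55556
```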

Every measurement unit needs to go into decimal places where precision matters. If you are recording a temperature in Celsius to 5 decimal places, that temperature in Fahrenheit will need a comparable number of decimal places. You just happened to pick an edge case where all those decimal places in F are 0. You could instead have picked, e.g., 26.52348 °C = 79.742264 °F.
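To check that arithmetic without floating-point noise, here is a sketch using Python's `decimal` module, assuming the standard relation F = C × 9/5 + 32 (the helper name is hypothetical). Because 9/5 = 1.8 terminates in base 10, a Celsius value with n decimal places converts to a Fahrenheit value with at most n + 1 decimal places:

```python
from decimal import Decimal

def celsius_to_fahrenheit(c: str) -> Decimal:
    """Convert a Celsius reading (given as a decimal string) to Fahrenheit.

    F = C * 9/5 + 32, and 9/5 == 1.8 is exact in base 10, so no rounding occurs.
    """
    return Decimal(c) * Decimal("1.8") + 32

print(celsius_to_fahrenheit("26.52348"))  # 79.742264
# Rounding 123 °F to 50.55556 °C and converting back does not land exactly on 123:
print(celsius_to_fahrenheit("50.55556"))  # 123.000008
```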

That is not the point being made. I even said that you can still express any and all temperatures in either scale (neither is more or less precise than the other). The point is that Fahrenheit was specifically designed to give you MORE whole numbers to work with over most temperature ranges. When he designed it, the Celsius scale did not exist, and the Rømer scale was in use. It had water freezing at 7.5, body temperature at 22.5, and water boiling at 60 degrees. Fahrenheit made the conscious decision to spread the range out (specifically, to multiply the existing range by 4) to remove the fractions from common reference temperatures.
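The "multiply by 4" step can be checked with exact fractions. This sketch only takes the Rømer reference points quoted above and scales them; it illustrates how the multiplication clears the halves and is not a reconstruction of the Fahrenheit scale, whose final marks (32 °F for freezing, 212 °F for boiling) are not simply four times the Rømer values. The dictionary and helper names are illustrative:

```python
from fractions import Fraction

# Rømer reference points as quoted in the comment (degrees Rømer).
ROMER_POINTS = {
    "water freezes": Fraction("7.5"),
    "body temperature": Fraction("22.5"),
    "water boils": Fraction("60"),
}

def scale_by(points, factor):
    """Multiply every reference point by the same factor."""
    return {name: value * factor for name, value in points.items()}

scaled = scale_by(ROMER_POINTS, 4)
for name, value in scaled.items():
    # A Fraction with denominator 1 is a whole number.
    print(f"{name}: {value} (whole number: {value.denominator == 1})")
```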

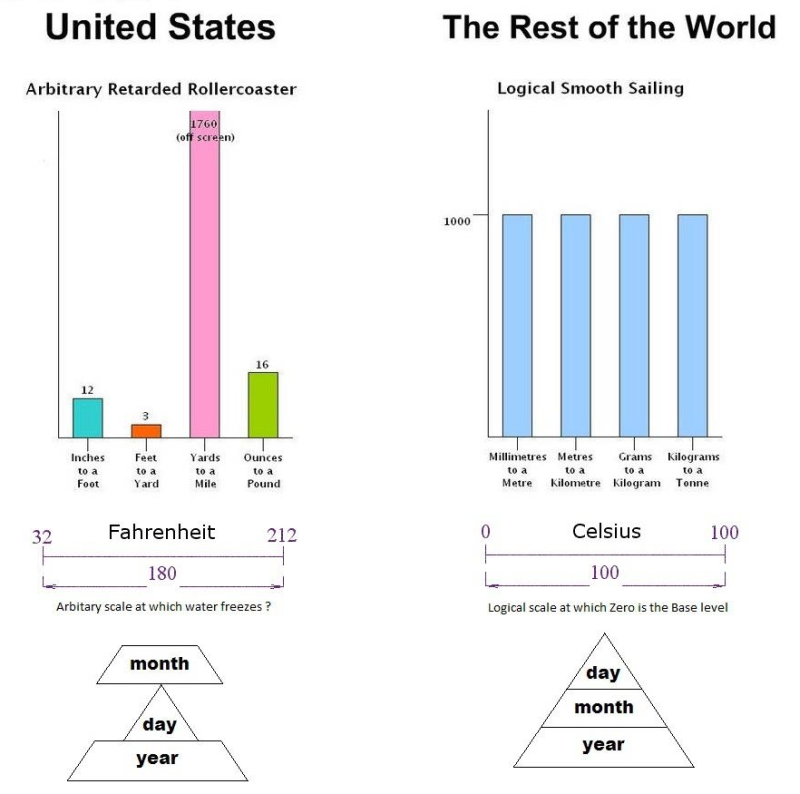

Celsius came after the Rømer and Fahrenheit scales were created, and the Fahrenheit scale is inarguably more fine-grained than Celsius. You get 180 degrees between water freezing and water boiling; with Celsius you only get 100. (We are talking whole degrees here. Normal human beings do not read their outdoor thermometer to the fourth decimal place, assuming they for some reason had one that precise.)
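A short sketch of that whole-degree comparison, assuming the standard fixed points of water at atmospheric pressure (0 °C / 32 °F and 100 °C / 212 °F); variable names are illustrative. It also shows the practical effect of the finer steps: rounding to the nearest whole degree is off by at most 0.5 °C in Celsius, but at most about 0.278 °C in Fahrenheit:

```python
from fractions import Fraction

# Freezing and boiling points of water at standard atmospheric pressure.
FREEZE_C, BOIL_C = 0, 100
FREEZE_F, BOIL_F = 32, 212

span_c = BOIL_C - FREEZE_C   # 100 one-degree steps
span_f = BOIL_F - FREEZE_F   # 180 one-degree steps

# Size of one Fahrenheit degree, measured in Celsius degrees.
f_degree_in_c = Fraction(span_c, span_f)

# Worst-case error (in °C) when a reading is rounded to a whole degree.
max_error_whole_c = Fraction(1, 2)
max_error_whole_f_in_c = Fraction(1, 2) * f_degree_in_c

print(span_c, span_f)     # 100 180
print(f_degree_in_c)      # 5/9
print(float(max_error_whole_c), round(float(max_error_whole_f_in_c), 3))  # 0.5 0.278
```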

I am not making a case for the imperial system. I think it's poorly designed, and a base-10 system makes more sense in almost every application, but the Fahrenheit scale was designed to give you a more fine-grained approach to temperatures in whole numbers, and it accomplishes exactly that. Neither I nor the OP I replied to was talking about the precision of either system. Both scales measure the same physical quantity, and any temperature scale can capture it precisely; the value just may not be a neat whole number, but some eight-decimal-place beast instead. No less precise, just far harder for humans without calculators to work with and use regularly.

Edit: I had mistyped the boiling point of water as 180, which is the difference between 212 and 32.

I understand your point that F has more whole numbers in the "habitable range". I'm just skeptical about the importance of that fact. We don't usually need precise temperatures in everyday life, so we tend to talk in whole numbers. But I don't think people have any trouble using decimal places in applications where the extra precision is needed.