this post was submitted on 05 Feb 2025

Technology

71537 readers

4005 users here now

This is a most excellent place for technology news and articles.

Our Rules

- Follow the lemmy.world rules.

- Only tech related news or articles.

- Be excellent to each other!

- Mod approved content bots can post up to 10 articles per day.

- Threads asking for personal tech support may be deleted.

- Politics threads may be removed.

- No memes allowed as posts, OK to post as comments.

- Only approved bots from the list below, this includes using AI responses and summaries. To ask if your bot can be added please contact a mod.

- Check for duplicates before posting, duplicates may be removed

- Accounts 7 days and younger will have their posts automatically removed.

Approved Bots

founded 2 years ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

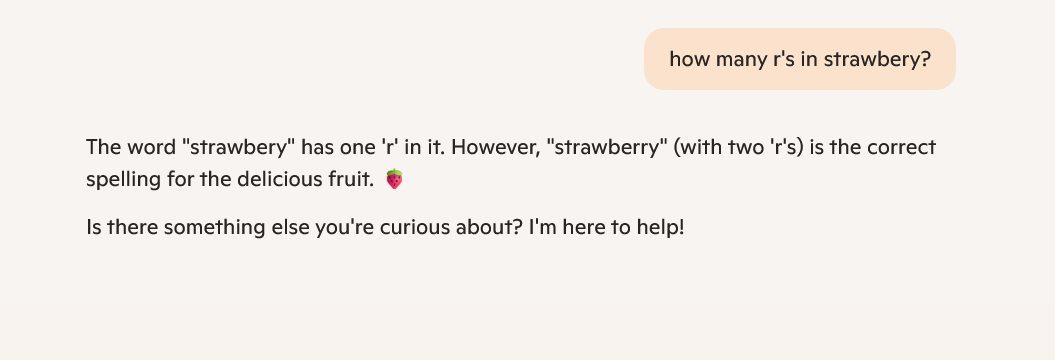

This is exactly the problem, though. They don’t have “intelligence” or any actual reasoning, yet they are constantly being used in situations that require reasoning.

Maybe if you focus on pro- or anti-AI sources, but if you talk to actual professionals or hobbyists solving actual problems, you'll see very different applications. If you go into it looking for problems, you'll find them; likewise, if you go into it looking for use cases, you'll find them.

Personally I have yet to find a use case. Every single time I try to use an LLM for a task (even ones they are supposedly good at), I find the results so lacking that I spend more time fixing its mistakes than I would have just doing it myself.

So you've never used it as a starting point to learn about a new topic? You've never used it to look up a song when you can only remember a small section of the lyrics? What about when you want to write a block of code that is simple but monotonous to write yourself? Or to suggest plans for how to create simple structures/inventions?

Anything with a verifiable answer that you'd ask on a forum can generally be answered by an LLM, because they're largely trained on forums and there's a decent chance the training data included someone asking the question you are currently asking.

Hell, ask ChatGPT what use cases it would recommend for itself, I'm sure it'll have something interesting.

No. I've used several models to "teach" me about subjects I already know a lot about, and they all frequently get many facts wrong. Why would I then trust it to teach me about something I don't know about?

No, because traditional search engines do that just fine.

See this comment.

I guess I've never tried this.

Kind of, but here's the thing: it's rarely faster than just using a good traditional search, especially if you know where to look and how to use advanced filtering features. Also (and this is key), verifying the accuracy of an LLM's answer requires about the same amount of work as just not using an LLM in the first place, so I default to skipping the middleman.

Lastly, I haven't even touched on the privacy nightmare that these systems pose if you're not running local models.

What situations are you thinking of that require reasoning?

I've used LLMs to create software I needed but couldn't find online.

Creating software is a great example, actually. Coding absolutely requires reasoning. I’ve tried using code-focused LLMs to write blocks of code, or even some basic YAML files, but the output is often unusable.

It rarely makes syntax errors, but it will do things like reference libraries that haven’t been imported or hallucinate functions that don’t exist. It also constantly misunderstands the assignment and creates something that technically works but doesn’t accomplish the intended task.

I think coding is one of the areas where LLMs are most useful for private individuals at this point in time.

It's not yet at the point where you just give it a prompt and it spits out flawless code.

For someone like me who is decent with computers but has little to no coding experience, it's an absolutely amazing tool/teacher.