Having trouble with quotes here: **I do not find it likely that 25% of currently existing occupations are going to be effectively automated in this decade, and I don’t think generative machine learning models like LLMs or Stable Diffusion are going to be the sole major driver of that automation.**

- I meant 25% of the tasks, not 25% of the jobs: some combination where AI systems can do 90% of the tasks in some jobs and 10% in others. I was also implicitly weighting by labor hours, so if 10% of all labor hours worked by US citizens are spent driving, and AI can drive, that alone would be 10% automation. Does this change anything in your response?
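To make the labor-hour weighting concrete, here is a minimal Python sketch. The occupations, hour counts, and automatable fractions are made-up placeholders, not real labor statistics; the point is only the arithmetic: each occupation contributes its share of total hours times the fraction of its tasks AI can do.

```python
# Hypothetical numbers for illustration only; NOT real labor statistics.
# Each row: (occupation, labor hours, fraction of its tasks AI can do)
occupations = [
    ("driving", 100.0, 1.0),   # 10% of all hours, fully automatable
    ("clerical", 200.0, 0.5),  # half of the tasks automatable
    ("nursing", 700.0, 0.1),   # only 10% of the tasks automatable
]


def weighted_automation_share(rows):
    """Fraction of all labor hours whose tasks AI could perform."""
    total_hours = sum(hours for _, hours, _ in rows)
    automated_hours = sum(hours * fraction for _, hours, fraction in rows)
    return automated_hours / total_hours


print(f"{weighted_automation_share(occupations):.0%}")  # 27%
```

Driving alone contributes 10 points here, matching the driving example in the reply; the other 17 come from partial automation of the remaining jobs.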

No. Even if Skynet had full control of a robot factory, heck, all the robot factories, and staffed them with a bunch of sleepless, foodless, always-motivated droids, it would still face many of the constraints we do: physical constraints (a conveyor belt can only go so fast without breaking), economic constraints (where do the robot parts, and the money to buy them, come from? Expect robotics IC shortages when semiconductor fabs’ backlogs are full of AI accelerators), even basic motivational constraints (who the hell programmed Skynet to be a paperclip C3PO maximizer?).

- I didn't mean Skynet. I meant AI systems. ChatGPT and all the other LLMs are AI systems. So is Midjourney with ControlNet. Humans want things. They want robots to make those things. They order robots to make more robots (initially using a lot of human factory workers to kick it off). Eventually robots get really cheap, making the things humans want cheaper, and that's where you get the limited form of Singularity I mentioned.
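The bootstrapping loop described here compounds: every robot built can help build more. A toy Python sketch of just that compounding step, assuming a fixed, hypothetical number of new robots each robot can produce per year and ignoring the physical, economic, and licensing limits raised elsewhere in this thread:

```python
def years_to_reach(seed_robots: float, target_robots: float,
                   new_robots_per_robot_per_year: float) -> int:
    """Toy model with hypothetical parameters: the fleet grows by a fixed
    fraction each year because existing robots build new ones. Ignores
    supply chains, energy, money, and licensing costs."""
    if seed_robots <= 0 or new_robots_per_robot_per_year <= 0:
        raise ValueError("need a positive seed fleet and a positive build rate")
    fleet = seed_robots
    years = 0
    while fleet < target_robots:
        fleet += fleet * new_robots_per_robot_per_year  # robots build robots
        years += 1
    return years


# A human-built seed fleet of 1,000 robots, doubling every year:
print(years_to_reach(1_000, 1_000_000_000, 1.0))  # 20
# Same seed, growing 50% per year:
print(years_to_reach(1_000, 1_000_000_000, 0.5))  # 35
```

Halving the build rate does not halve the speed: it goes from 20 to 35 years here, because the growth compounds.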

At every point, humans are ordering these robots and using everything they make. An AI system has many parts: device drivers, hardware, cloud services, many neural networks, simulators, and so on. One thing that might slow it all down is the enormous list of IP needed to make even one robot work: the owners of all those software packages will still demand a cut, even when the robot hardware is built in factories staffed almost entirely by robots.

**I just think the threat model of autonomous robot factories making superhuman android workers and replicas of themselves at an exponential rate is pure science fiction.**

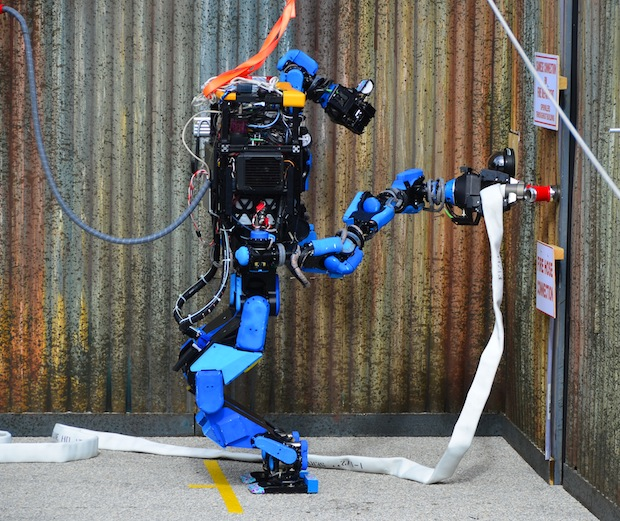

- Again, that's a detail I didn't give. Obviously there are many kinds of robotic hardware, each specialized for its task, and the only reason to make a robot humanoid is if it's a sexbot or is otherwise used as a 'face' for humans. None of the hardware has to be superhuman, though industrial robot arms obviously have greater lifting capacity than humans. To give a sense of what the real hardware would look like: most robots will be in no way superhuman. They will lack sensors they don't need, won't be armored, won't even have onboard batteries or compute hardware, will be missing entire human sensory modalities, cannot replicate themselves, and so on. It's just hardware that does a task, made in a factory, and it takes many factories full of these machines to make all the parts.

think:

Software you write can have a "belief" as well. The course I took on this had us write Kalman filters, where you start with an estimate of some quantity. That estimate is your "belief", and it comes with a variance that says how uncertain you are.

Each measurement is a (value, variance) pair, where the variance comes from the quality of the sensor that produced it.
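A minimal one-dimensional Kalman filter in Python, as a sketch of the (estimate, variance) bookkeeping described above. The `Belief` type, the constant-position motion model, and the noise values and readings in the usage lines are illustrative assumptions, not the course's code.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    mean: float      # current estimate of the quantity
    variance: float  # uncertainty of that estimate


def predict(belief: Belief, motion: float, motion_variance: float) -> Belief:
    """Predict step: shift the estimate by a known motion.
    Noisy motion adds uncertainty, so the variance grows."""
    return Belief(belief.mean + motion, belief.variance + motion_variance)


def update(belief: Belief, value: float, variance: float) -> Belief:
    """Update step: fuse one (value, variance) measurement into the belief."""
    gain = belief.variance / (belief.variance + variance)  # Kalman gain, 0..1
    mean = belief.mean + gain * (value - belief.mean)
    return Belief(mean, (1.0 - gain) * belief.variance)


# Start with a vague belief and refine it with a noisy sensor
# (hypothetical readings of a quantity that is not moving).
belief = Belief(mean=0.0, variance=1000.0)
for reading in [4.8, 5.3, 4.9, 5.1]:
    belief = predict(belief, motion=0.0, motion_variance=0.01)
    belief = update(belief, value=reading, variance=0.5)
    print(f"estimate={belief.mean:.2f}  variance={belief.variance:.3f}")
```

The gain weighs the measurement against the current belief: a precise sensor (small variance) pulls the estimate hard, a noisy one barely moves it, and every update leaves the variance smaller than it was before.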

It's an overloaded word because humans are often unwilling to update their beliefs unless they are simple things, like "I believe the forks are in the drawer to the right of the sink." You believe that because you think you saw them there last. There is uncertainty: you might have misremembered, since your memory is unreliable, and so are your eyes. If it's your kitchen and you've made thousands of observations, your belief has low uncertainty; if it's a new place, your belief has high uncertainty.

If you go and look right now and the forks are in fact there, you update your belief, and your uncertainty shrinks.
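The forks question is yes/no rather than a continuous quantity, so a Kalman filter doesn't apply directly. The same idea in discrete form is a single Bayes' rule update (the Kalman filter is the Gaussian special case of this kind of Bayes filter). A Python sketch, with made-up priors for "familiar kitchen" and "new place" and made-up reliabilities for your eyes:

```python
def update_belief(prior: float, p_see_if_there: float,
                  p_see_if_not_there: float) -> float:
    """Bayes' rule: P(forks in drawer | you see forks in drawer)."""
    evidence_if_there = prior * p_see_if_there
    evidence_if_not = (1.0 - prior) * p_see_if_not_there
    return evidence_if_there / (evidence_if_there + evidence_if_not)


# Hypothetical eye reliability: you usually spot forks that are there (0.95)
# and rarely "see" forks that aren't (0.05).
HIT, FALSE_ALARM = 0.95, 0.05

familiar_kitchen = update_belief(0.99, HIT, FALSE_ALARM)  # confident prior
new_place = update_belief(0.60, HIT, FALSE_ALARM)         # unsure prior

print(f"familiar kitchen: 0.99 -> {familiar_kitchen:.4f}")
print(f"new place:        0.60 -> {new_place:.4f}")
```

Both beliefs end up high, but the uncertain one moves much further; in the familiar kitchen, one more look barely changes anything.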