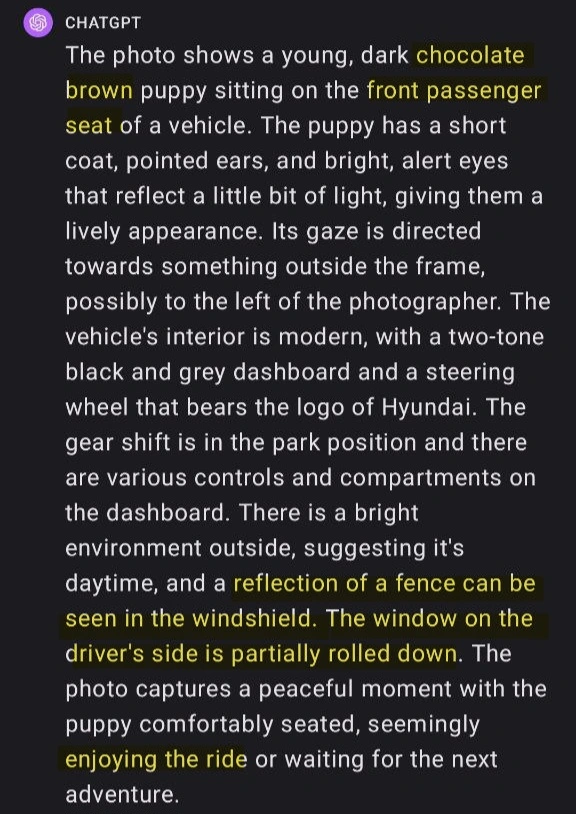

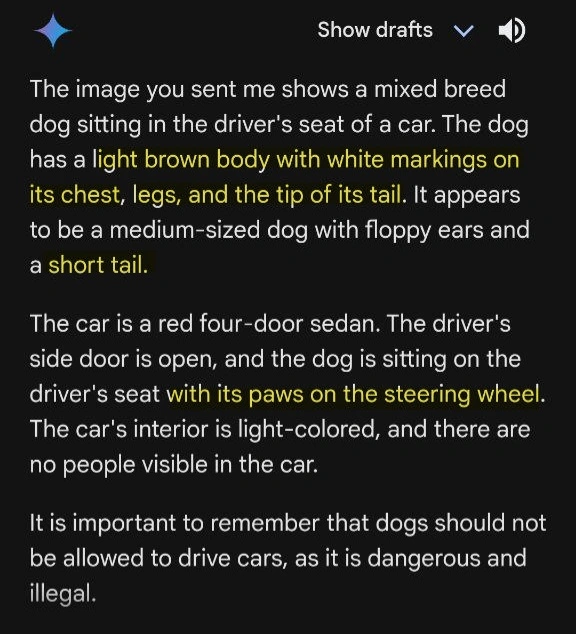

Edit: After reading the discussion, I figured I'd let GPT-4 speak for itself:

The quest to identify true artificial intelligence (AI) indeed presents challenges, especially as AI models become more sophisticated. Let's explore some indicators that researchers and practitioners consider when assessing AI systems:

- Understanding Context and Meaning:

  - True AI should demonstrate an understanding of context and meaning. It should not merely generate plausible-sounding sentences but also comprehend the content it produces.

  - A system that can engage in nuanced conversations, infer intent, and provide relevant responses based on context would be a strong indicator of advanced AI.

- Generalization and Adaptability:

  - An AI system that can generalize from its training data and adapt to novel situations is valuable.

  - True AI should not be limited to memorizing specific examples but should exhibit flexibility in handling diverse scenarios.

- Creativity and Novelty:

  - Creativity is a hallmark of human intelligence. An AI system that generates novel ideas, art, or solutions beyond its training data suggests progress.

  - For instance, AI-generated poetry, music, or visual art that resonates with human emotions could be an indicator.

- Ethical Decision-Making:

  - True AI should navigate ethical dilemmas. It should consider consequences, fairness, and societal impact.

  - An AI system that can weigh trade-offs and make ethical choices aligning with human values is a positive sign.

- Self-Awareness and Self-Improvement:

  - While self-awareness akin to human consciousness remains elusive, an AI system that can self-assess its performance and seek improvement is promising.

  - Continuous learning, adaptation, and self-correction are essential traits.

- Transparency and Explainability:

  - True AI should be transparent about its decision-making process. It should provide explanations for its outputs.

  - Efforts to develop interpretable AI models and techniques for explaining their predictions contribute to trustworthiness.

- Robustness and Safety:

  - AI systems must handle adversarial inputs, noisy data, and edge cases gracefully.

  - Robustness against attacks and safety precautions to prevent harmful behavior are critical indicators.

- Human-AI Collaboration:

  - Rather than replacing humans, true AI should complement human abilities.

  - Systems that collaborate effectively with humans (such as AI assistants, co-creative tools, or medical diagnostics) demonstrate progress.

- Quantifiable Metrics:

  - Researchers propose various metrics to assess AI capabilities, such as perplexity, BLEU score, F1 score, and accuracy.

  - However, no single metric captures the full spectrum of intelligence.

- Detection Tools:

  - Researchers are developing tools to differentiate between human- and AI-generated text.

  - These tools analyze features like fluency, word frequency, punctuation patterns, and sentence length¹.

  - While imperfect, they contribute to identifying AI-generated content.
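
To make the metrics and detection features named in the list a bit more concrete, here is a rough Python sketch. Everything in it is my own illustration and not something from the quoted answer or from any real detector: the function names (`accuracy`, `f1_score`, `perplexity`, `surface_features`) are hypothetical, the feature set is a deliberately crude stand-in for "word frequency, punctuation patterns, and sentence length", and the perplexity function assumes you already have per-token probabilities from some language model.

```python
import math
import re
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def perplexity(token_probs):
    """exp of the average negative log-probability assigned to each token.

    Assumption: token_probs are probabilities (0 < p <= 1) that some
    language model gave to the tokens that actually occurred.
    """
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def surface_features(text):
    """Crude surface statistics; a toy version of what a detector might inspect."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    punctuation = re.findall(r"[,;:!?.]", text)
    return {
        "avg_sentence_length": len(words) / len(sentences) if sentences else 0.0,
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
        "punctuation_per_word": len(punctuation) / len(words) if words else 0.0,
        "top_words": Counter(words).most_common(3),
    }
```

You could call `surface_features` on a human-written paragraph and a generated one and compare the dictionaries, but as the list itself says, no single number here settles anything; these are inputs a classifier might use, not a verdict.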

In summary, the arrival of true AI may not be a singular event but a gradual evolution marked by advancements in the above dimensions. As AI continues to progress, our understanding of its capabilities will evolve, and new indicators may emerge.

Source: Conversation with Bing, 2/29/2024

(1) How to spot AI-generated text | MIT Technology Review. https://www.technologyreview.com/2022/12/19/1065596/how-to-spot-ai-generated-text/

(2) Intelligent Supertrend (AI) - Buy or Sell Signal — Indicator by .... https://www.tradingview.com/script/q9244PAH-Intelligent-Supertrend-AI-Buy-or-Sell-Signal/

(3) Indicators - True ALGO. https://truealgo.com/indicators/

(4) Improve Key Performance Indicators With AI - MIT Sloan Management Review. https://sloanreview.mit.edu/article/improve-key-performance-indicators-with-ai/

(5) New AI classifier for indicating AI-written text - OpenAI. https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text/