In 2018, I spent two days at Facebook's Menlo Park campus doing back-to-back on-the-record interviews with executives who worked on the company's content policy teams. This was after we had published article after article exposing the many shortcomings of Facebook's rules, based on internal guidebooks that were leaked to Joseph. We learned, for example, that Facebook would sometimes bend its rules to comply with takedown requests from governments that were threatening to block the service in their country, that Facebook had drawn an impossible-to-define difference between "white supremacy," "white nationalism," and "white separatism" that didn't stand up to any sort of scrutiny, and that it had incredibly detailed rules about when it was allowable to show a Photoshopped anus on the platform.

After I had spent months asking for interviews with its top executives, Facebook's public relations team said that, instead, I should fly to Menlo Park and sit in on a series of meetings about how the rules were made, how the team dealt with difficult decisions, how third-party stakeholders like civil liberties groups were engaged, and how particularly difficult content decisions were escalated to Sheryl Sandberg.

One of the people I interviewed while at Facebook headquarters was Guy Rosen, who was then Facebook's head of product and is now its chief information security officer. I interviewed Rosen about how it could be possible that Facebook had failed so terribly at content moderation in Myanmar that it was being credibly accused of helping to facilitate the genocide of the Rohingya people. What Rosen told me shocked me at the time, and is something that I think about often when I write about Facebook. Rosen said that Facebook's content moderation AI wasn't able to parse the Burmese language because it wasn't a part of Unicode, the international standard for text encoding. Besides having very few content moderators who knew Burmese (and no one in Myanmar), Facebook had no idea what people were posting in Burmese, and no way to understand it: "We still don't know if it's really going to work out, due to the language challenges," Rosen told me. This was in 2018; Facebook had been operating in Myanmar for seven years and had at that time already been accused of helping to facilitate this human rights catastrophe.

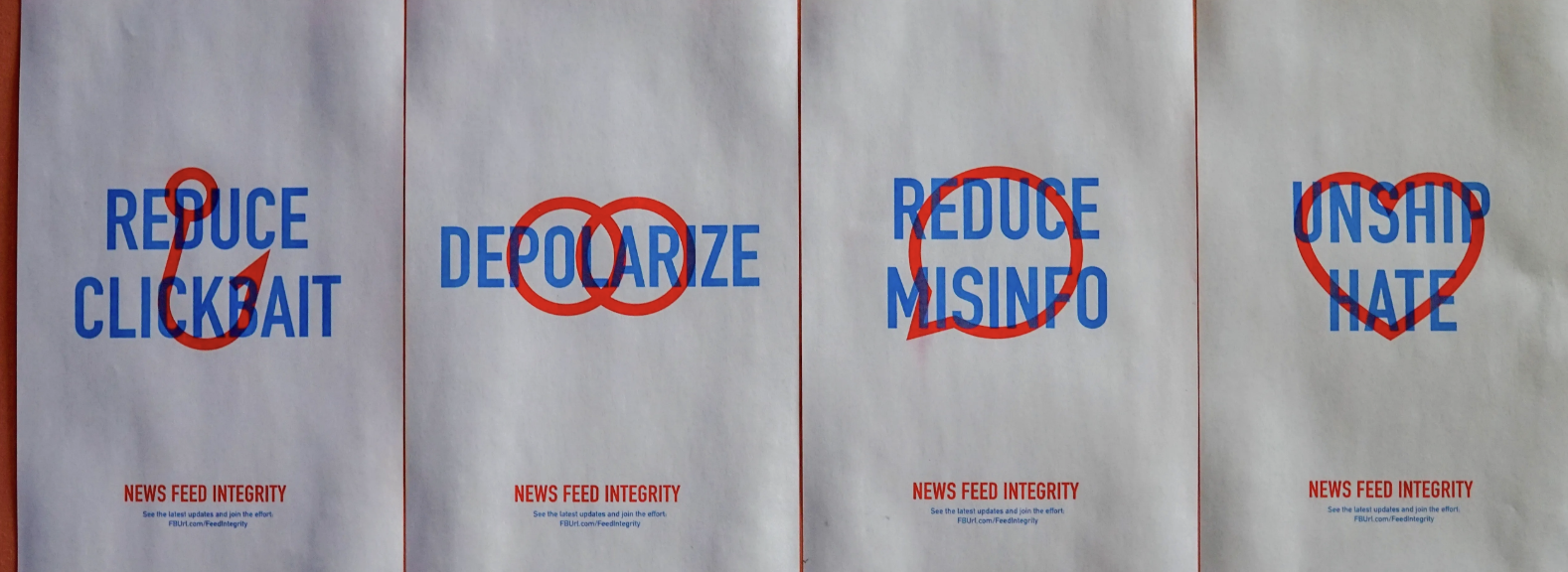

Posters that were hanging at Facebook HQ in 2018. Image: Jason Koebler

My time at Facebook was full of little moments like this. I had a hard time squaring the often incredibly thoughtful ways that Facebook employees were trying to solve incredibly difficult problems with the horrendous outcomes we were seeing all over the world. Posters around HQ read "REDUCE CLICKBAIT," "DEPOLARIZE," "REDUCE MISINFO," and "UNSHIP HATE." Yet much of what I saw on Facebook at the time, and still see to this day, is, well, all of those things. Other posters talked about having respect for employees, even as I wrote about a workforce that was largely made up of low-wage contractors around the world whose job was to look at terrorism videos, hate speech, graphic sexual content, etc. When I asked what Facebook was doing to support the mental health needs of its content moderators and to help them deal with PTSD, the executive in charge of content moderator training at the time told me that the company had designed "actual physical environments" in its offices where traumatized employees could "just kind of chillax or, if you want to go play a game, or if you want to just walk away, you know, be by yourself."

The biggest question I had for years after this experience was: Does Facebook know what it's actually doing to the world? Do they care?

In the years since, I have written dozens of articles about Facebook and Mark Zuckerberg, have talked to dozens of employees, and have been leaked internal documents and meetings and screenshots. Through all of this, I have thought about the ethics of working at Facebook, namely the idea that you can change a place that does harm like this "from the inside," and how people who work there make that moral determination for themselves. And I have thought about what Facebook cares about, what Mark Zuckerberg cares about, and how it got this way.

Mostly, I have thought about whether there is any underlying tension or concern about what Facebook is doing and has done to the world; whether its "values," to the extent a massive corporation has values, extend beyond "making money," "amassing power," "growing," "crushing competition," "avoiding accountability," and "stopping regulation." Basically, I have spent an inordinate amount of time wondering to myself if these people care about anything at all.

Careless People, by Sarah Wynn-Williams, is the book about Facebook that I didn't know I had been waiting a decade to read. It's also, notably, a book that Facebook does not want you to read; Wynn-Williams is currently under a gag order from a third-party arbitrator that prevents her from promoting or talking about the book because Facebook argued that it violates a non-disparagement clause in her employment contract.

Wynn-Williams worked at Facebook between 2011 and 2017, rising to become the director of public policy, a role she originally pitched as being Facebook's "diplomat" and that ultimately became a mix of setting up meetings between world leaders and Mark Zuckerberg and Sheryl Sandberg, determining the policy and strategy for these meetings, and flying around the world to meet with governments to try to prevent them from blocking Facebook.

The book feels so important and cathartic because, as a memoir, it does something that reported books about Facebook can't quite do. It follows Wynn-Williams' interior life as she recounts what drew her to Facebook (the opportunity to influence politics at a global scale beyond what she was able to do at the United Nations), the strategies she pursued and the actions she took for the company (flying to Myanmar by herself to meet with the junta to get Facebook unblocked there, for example), and her discoveries and ultimate disillusionment with the company as she goes on what often feel like repeated Veep-like quests: to get Mark Zuckerberg to take interactions with world leaders seriously, to engineer a "spontaneous" interaction with Xi Jinping, and to get him or Sandberg to care about the role Facebook played in getting Trump and other autocrats elected.

Facebook HQ. Image: Jason Koebler

She was in many of the rooms where big decisions were made, or at least where the fallout of many of Facebook's largest scandals was discussed. If you care at all about how Facebook has impacted the world, the book is worth reading for the simple reason that it shows, repeatedly, that Mark Zuckerberg and Facebook as a whole Knew. About everything. And when they didn't know but found out, they sought to minimize the problem or slow-play solutions.

Yes, Facebook lied to the press often, about a lot of things; yes, Internet.org (Facebook's strategy to give "free internet" to people in the developing world) was a cynical ploy to get new Facebook users; yes, Facebook knew that it couldn't read posts in Burmese and didn't care; yes, it slow-walked solutions to its moderation problems in Myanmar even after it knew about them; yes, Facebook bent its own rules all the time to stay unblocked in specific countries; yes, Facebook took down content at the behest of China, then pretended it was an accident and lied about it; yes, Mark Zuckerberg and Sheryl Sandberg intervened on major content moderation decisions, then implied that they did not. Basically, it confirmed my priors about Facebook, which is not a criticism, because reporting on this company and getting nothing beyond a canned statement or carefully rehearsed answer from them over and over for years and years and years has made me feel like I was going crazy. Careless People confirmed that I am not.

[Content truncated due to length...]

From 404 Media via this RSS feed

Man... this sounds like a really rough, but informative read.