this post was submitted on 05 Feb 2024

661 points (88.2% liked)

Memes



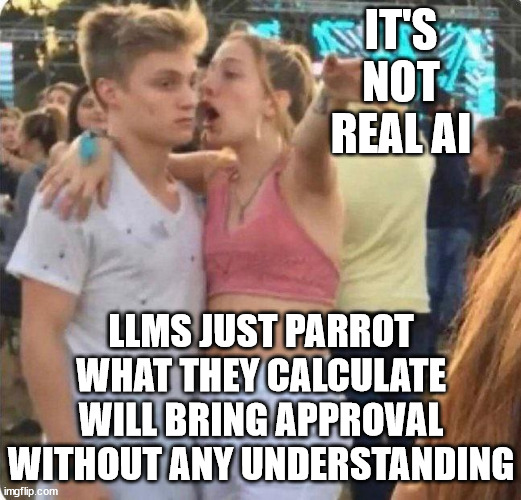

The difference is that you can throw enough bad info at it that it will start parroting that instead of factual information, because it doesn't have the ability to criticize the information it receives. A human, by contrast, can be told that the sky is purple with orange dots a thousand times a day and will still point at the sky and tell you "No."

Ha ha yeah humans sure are great at not being convinced by the opinions of other people, that's why religion and politics are so simple and society is so sane and reasonable.

Helen Keller would believe you that it's purple.

If humans didn't have eyes, they wouldn't know the colour of the sky. If you give an AI a colour video feed of the outside, it'll be able to tell you exactly what colour the sky is, using a whole range of very accurate metrics.

How come all LLMs keep inventing facts and telling false information then?

People do that too; actually, we do it a lot more than we realise. Studies of memory, for example, have shown that we create details we expect to be there to fill in blanks, and that we convince ourselves we remember them even when presented with evidence that refutes them.

A lot of the newer implementations use more complex methods of fact verification. It's not easy to explain, but essentially it comes down to the weight you give different layers. GPT 5 is already training and likely to be out around October, but even before that we're seeing pipelines that use LLMs to code task-based processes: an LLM is bad at chess, but it could easily install Stockfish in a VM and beat you every time.