this post was submitted on 07 Mar 2025

273 points (97.6% liked)

Buy European

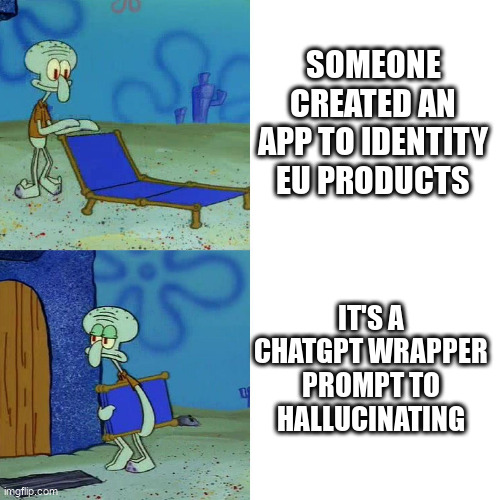

Devs are aware. This was a quick-and-dirty prototype, and they already knew about the issues with using ChatGPT. They did it to get something working ASAP. In an interview (in Danish) the devs acknowledged this and said they are moving toward a French LLM (I forget the name, but that's irrelevant to the point, which is that they will drop ChatGPT).

If that's their solution, then they have absolutely no understanding of the systems they're using.

ChatGPT isn't prone to hallucination because it's ChatGPT; it's prone because it's an LLM. That's a fundamental problem common to all LLMs.

phi-4 is the only one I'm aware of that was deliberately trained to refuse instead of hallucinating. It's mind-blowing to me that this isn't standard. Everyone is trying to maximize benchmarks at all costs.

I wonder if diffusion LLMs will hallucinate less, since they inherently have error correction built into their inference process.

Even that won't be truly effective. It's all marketing, at this point.

The problem of hallucination really is fundamental to the technology. If there's a way to prevent it, it won't be as simple as training the model differently.