this post was submitted on 29 Sep 2024

People Twitter

People tweeting stuff. We allow tweets from anyone.

RULES:

- Mark NSFW content.

- No doxxing people.

- Must be a pic of the tweet or similar. No direct links to the tweet.

- No bullying or international politics.

- Be excellent to each other.

- Provide an archived link to the tweet (or similar) being shown if it's a major figure or a politician.


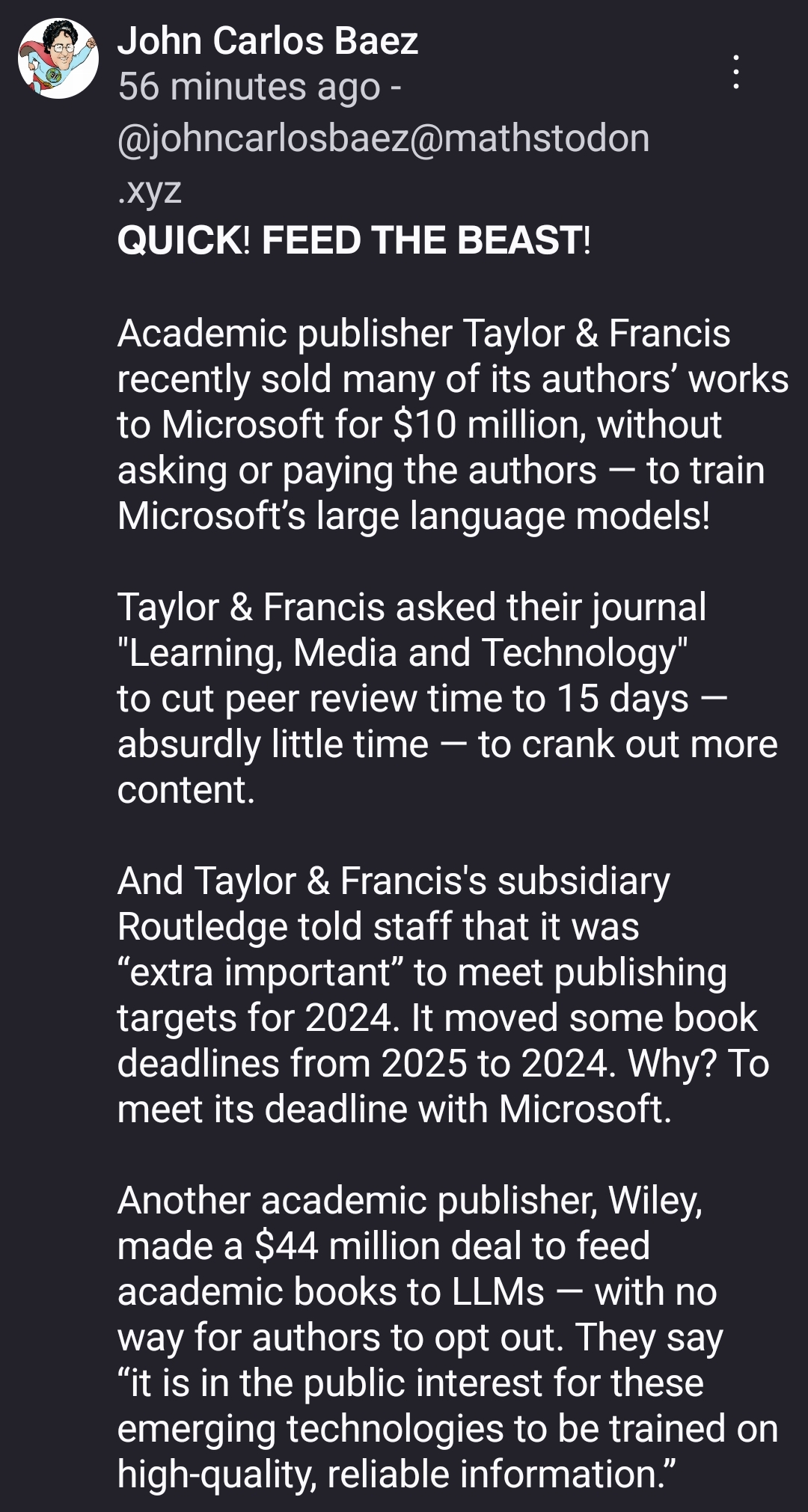

Speaking of fearmongering, you note that:

Setting aside the false equivalence between finding inspiration at an art gallery and feeding millions of artworks into a non-human AI for automated, high-speed replication and derivation of dubious legality, copyright is how creative workers sustain their careers and stay incentivized. Your Twitter experiences are anecdotal; in more generalized reality:

The above four points were taken from the Proceedings of the 2023 AAAI/ACM Conference on AI, Ethics, and Society (Jiang et al., 2023, sections 4.1 and 4.2).

Help me understand your viewpoint. Is copyright nonsensical? Are we hypocrites for worrying about the ways our hosts are using our produced goods? There is a lot of liability and a lot of worry here, and I'm having trouble reconciling the two positions: you seem to be implying that this liability and worry are unfounded, but the evidence seems to point elsewhere.

Thanks for talking with me! ^ᴗ^

(Comment 2/2)