this post was submitted on 30 Jun 2024

562 points (98.1% liked)

Programmer Humor

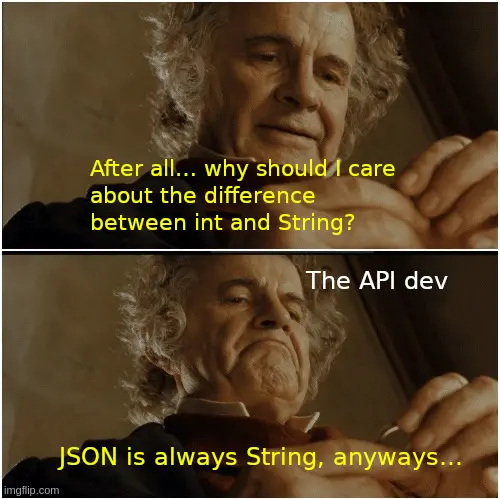

To whoever does that, I hope that there is a special place in hell where they force you to do type safe API bindings for a JSON API, and every time you use the wrong type for a value, they cave your skull in.

Sincerely, a frustrated Rust dev

"Hey, it appears to be int most of the time except that one time it has letters."

throws keyboard in trash

Rust has perfectly fine tools to deal with such issues, namely enums. Of course that cascades through every bit of related code and is a major pain.

Sadly it doesn't fix the bad documentation problem. I often don't care that a field is special and either give a string or number. This is fine.

What is not fine, and which should sentence you to eternal punishment, is to not clearly document it.
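As a rough illustration of the enum approach mentioned above, here is a minimal std-only sketch. The `IntOrText` type and the `classify` helper are hypothetical names made up for this example; in a real crate one would more likely reach for serde's `#[serde(untagged)]` enum representation instead of classifying tokens by hand.

```rust
/// A field that is "int most of the time", except when it has letters.
#[derive(Debug, PartialEq)]
enum IntOrText {
    Int(i64),
    Text(String),
}

/// Classify one raw JSON scalar token (hypothetical helper, std only).
/// This is not a JSON validator: escapes inside strings are not handled.
/// Returns None for anything that is neither an integer nor a quoted string.
fn classify(raw: &str) -> Option<IntOrText> {
    let raw = raw.trim();
    if let Ok(n) = raw.parse::<i64>() {
        return Some(IntOrText::Int(n));
    }
    let inner = raw.strip_prefix('"')?.strip_suffix('"')?;
    Some(IntOrText::Text(inner.to_string()))
}

fn main() {
    for token in ["42", "\"42a\"", "null"] {
        println!("{token} -> {:?}", classify(token));
    }
}
```

Every consumer of the field then has to `match` on both variants, which is the "cascades through every bit of related code" part.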

Don't you love it when you publish a crate, have tested it on thousands of returned objects, only for the first issue to be "field is sometimes null/other type?". You really start questioning everything about the API, and sometimes you'd rather parse it as `serde::Value` and call it a day.

API is sitting there cackling like a mad scientist in a lightning storm.

True, and also true.

This man has interacted with SAP.

The worst thing is: you can't even put an int in a json file. Only doubles. For most people that is fine, since a double can function as a 32 bit int. But not when you are using 64 bit identifiers or timestamps.

That’s an artifact of JavaScript, not JSON. The JSON spec states that numbers are a sequence of digits with up to one decimal point. Implementations are not obligated to decode numbers as floating point. Go will happily decode into a 64-bit int, or into an arbitrary precision number.
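To make the difference concrete, here is a small std-only sketch that decodes the same digit sequence two ways: straight into a 64-bit integer, and through a double as a JavaScript-style decoder would. The helper names `via_i64` and `via_f64` are invented for this example.

```rust
/// Largest integer a double (and so a JavaScript Number) holds exactly: 2^53 - 1.
const MAX_SAFE_INTEGER: i64 = (1 << 53) - 1;

/// Decode the digits directly as a 64-bit integer.
fn via_i64(digits: &str) -> Option<i64> {
    digits.parse::<i64>().ok()
}

/// Decode the digits as a double first, then convert to an integer.
fn via_f64(digits: &str) -> Option<i64> {
    digits.parse::<f64>().ok().map(|x| x as i64)
}

fn main() {
    let digits = "9007199254740993"; // 2^53 + 1, just past MAX_SAFE_INTEGER
    println!("max safe integer: {MAX_SAFE_INTEGER}");
    println!("as i64:  {:?}", via_i64(digits));
    println!("via f64: {:?}", via_f64(digits));
}
```

The text on the wire is identical in both cases; only the decoder's choice of target type decides whether the last digit survives.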

What that means is that you cannot rely on numbers in JSON. Just use strings.

Unless you're dealing with some insanely flexible schema, you should be able to know what kind of number (int, double, and so on) a field should contain when deserializing a number field in JSON. Using a string does not provide any benefits here unless there's some big in your deserialization process.

What's the point of your schema if the receiving end is JavaScript, for example? You can convert a string to BigNumber, but you'll get wrong data if you're sending a number.

I'm not following your point so I think I might be misunderstanding it. If the types of numbers you want to express are literally incapable of being expressed using JSON numbers then yes, you should absolutely use string (or maybe even an object of multiple fields).

The point is that everything is expressible as JSON numbers; it's when those numbers are read by JS that there's an issue.

Can you give a specific example? Might help me understand your point.

I am not sure what a good example would be. My point was that the spec and the RFC are very abstract and never mention any limitations on the number content. Of course, the implementations in each language will be more limited than that, and if the limitations differ, it will create a dissimilar experience for the user, like this: "Why does JSON.parse corrupt large numbers and how to solve this"

This is what I was getting at here https://programming.dev/comment/10849419 (although I had a typo and said big instead of bug). The problem is with the parser in those circumstances, not the serialization format or language.

I disagree a bit, in that the schema often doesn't specify limits and operates in the JSON standard's terms: it will say that you should get/send a number, but will not usually say at what point it will break.

This is the opposite of what the C language does, being so specific that it is not even Turing complete (in a theoretical sense; in practice it is).

Then the problem is the schema being underspecified. Take the classic pet store example. It says that the id is int64. https://petstore3.swagger.io/#/store/placeOrder

If some API is so underspecified that it just says "number" then I'd say the schema is wrong. If your JSON parser has no way of passing numbers as arbitrary length number types (like BigDecimal in Java) then that's a problem with your parser.

I don't think the truly truly extreme edge case of things like C not technically being able to simulate a truly infinite tape in a Turing machine is the sort of thing we need to worry about. I'm sure if the JSON object you're parsing is some astronomically large series of nested objects that specifications might begin to fall apart too (things like the maximum amount of memory any specific processor can have being a finite amount), but that doesn't mean the format is wrong.

And simply choosing to "use string instead" won't solve any of these crazy hypotheticals.

Underspecified schema is indeed a problem, but I find it too common to just shrug it off

Also, you're very right that just using strings will not improve the situation 🤝

What makes you think so?

https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/JSON/parse

Because no one is using JSON.parse directly. Do you guys even code?

It’s neither JSON’s nor JavaScript’s fault that you don’t want to make a simple function call to properly deserialize the data.

It's not up to me. Or you.

You HAVE to. I am a Rust dev too and I'm telling you, if you don't convert numbers to strings in json, browsers are going to overflow them and you will have incomprehensible bugs. Json can only be trusted when serde is used on both ends
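A minimal sketch of what "convert numbers to strings" can look like for a 64-bit identifier, using only std. The `id` field name and both helper functions are assumptions for illustration; a serde-based crate would usually do this with a custom serializer on the field rather than by formatting JSON by hand.

```rust
/// Emit a JSON object whose 64-bit id is carried as a string
/// (hypothetical field name "id"), so a double-based decoder cannot round it.
fn encode_id(id: u64) -> String {
    format!("{{\"id\":\"{id}\"}}")
}

/// Read the id back from its quoted token. A bare number is rejected
/// so the two representations are never mixed.
fn decode_id(token: &str) -> Option<u64> {
    token
        .strip_prefix('"')?
        .strip_suffix('"')?
        .parse::<u64>()
        .ok()
}

fn main() {
    let id = u64::MAX;
    println!("{}", encode_id(id));
    println!("{:?}", decode_id("\"18446744073709551615\""));
}
```

The receiving side can then hand the string to whatever big-integer type it has, instead of letting the JSON parser pick a double.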

This is understandable in that use case. But it's not every day that you deal with values in the range of overflows. So I mostly assumed this is fine in that use case.

Relax, it's just JSON. If you wanted to not be stringly-typed, you'd have not used JSON.

(though to be fair, I hate it when people do bullshit types, but they got a point in that you ought to not use JSON in the first place if it matters)

As if I had a choice. Most of the time I'm only on the receiving end, not the sending end. I can't just magically use something else when that something else doesn't exist.

Heck, even when I'm on the sending end, I'd use JSON. Just not bullshit ones. It's not complicated to only have static types, or having discriminant fields

Well, apart from float numbers and booleans, all other types can only be represented by a string in JSON. Date with timezone? String. BigNumber/Decimal? String. Enum? String. Everything is a string in JSON, so why bother?

I got nothing against other types. Just numbers/misleading types.

Although, enum variants shall have a label field for identification if they aren't automatically inferable.
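For what a label (discriminant) field might look like, here is a small std-only sketch. The `Shape` enum, its variants, and the `type` field name are all made up for this example; serde users would typically get the same shape from an internally tagged enum (`#[serde(tag = "type")]`).

```rust
/// Hypothetical payload with two variants that share no fields.
enum Shape {
    Circle { radius: u32 },
    Square { side: u32 },
}

/// Serialize with an explicit "type" label so the receiver never has to
/// guess the variant from which fields happen to be present.
fn to_json(shape: &Shape) -> String {
    match shape {
        Shape::Circle { radius } => {
            format!("{{\"type\":\"circle\",\"radius\":{radius}}}")
        }
        Shape::Square { side } => {
            format!("{{\"type\":\"square\",\"side\":{side}}}")
        }
    }
}

fn main() {
    println!("{}", to_json(&Shape::Circle { radius: 2 }));
    println!("{}", to_json(&Shape::Square { side: 3 }));
}
```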

Well, the issue is that JSON is based on JS types, but other languages can interpret the values in different ways. For example, Rust can interpret a number as a 64 bit int, but JS will always interpret a number as a double. So you cannot rely on numbers to represent data correctly between systems you don't control or systems written in different languages.

No problem with strings in JSON, until some smart developer you get JSONs from decides to interchangeably use String and number, and maybe a boolean (but only `false`) to show that the value is not set, and of course `null` for a missing value that was supposed to be optional all along but go figure that it was