Singularity | Artificial Intelligence (AI), Technology & Futurology

96 readers


About:

This sublemmy is a place for sharing news and discussions about artificial intelligence, core developments in humanity's technology, and the societal changes that come with them. Basically, it is a futurology sublemmy centered on AI, but not limited to AI.

Rules:

- Posts that break the rules and are not corrected after the problem has been pointed out will be deleted, no matter how much engagement they got, and then reposted by me in a form that follows the rules. I will wait at most 2 days for the poster to comply before doing this.

- No Low-quality/Wildly Speculative Posts.

- Keep posts on topic.

- Don't make posts whose main focus is a link to a paywalled article.

- No posts linking to Reddit posts.

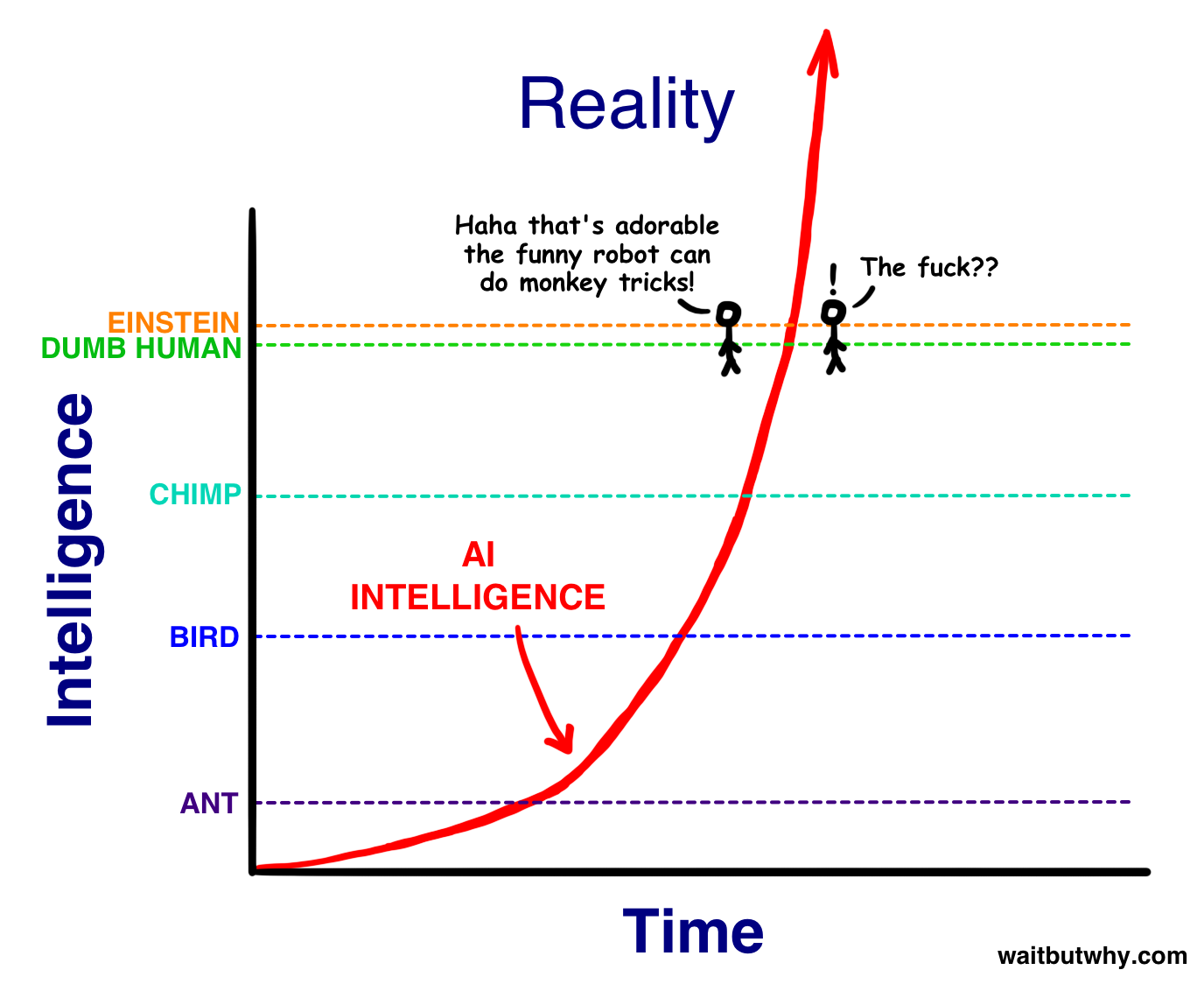

- Memes are fine as long as they are high quality and/or can lead to serious, on-topic discussions. If we end up with too many memes, we will create a meme-specific singularity sublemmy.

- Titles must include the date of the source in the format dd.mm.yyyy (e.g. 24.06.2023).

- Please be respectful to each other.

- No summaries made by LLMs. I would like to keep the quality of comments as high as possible.

- (Rule implemented 30.06.2023) Don't make posts whose main focus is a link to a tweet. Melon decided that content on the platform is going to be locked behind a login requirement, and I'm not going to force everyone to make a Twitter account just so they can see some news.

- No AI-generated images/videos unless their purpose is to represent new advancements in generative technology that are no older than 1 month.

- If the post title isn't the original title of the article or paper, the first thing in the post body should be the original title, written in this format: "Original title: {title here}".

Related sublemmies:

[email protected] (Our community focuses on programming-oriented, hype-free discussion of Artificial Intelligence (AI) topics. We aim to curate content that truly contributes to the understanding and practical application of AI, making it, as the name suggests, “actually useful” for developers and enthusiasts alike.)

Note:

My posts on this sub currently rely VERY heavily on info from r/singularity and other subreddits on Reddit. At some point I plan to make a list of sites that write or aggregate news on the topics this sublemmy covers, so we can get news faster and rely less on Reddit. If you know any good sites, please DM me.

founded 1 year ago


How elite schools like Stanford became fixated on the AI apocalypse (article from 5.07.2023)

(www.washingtonpost.com)
