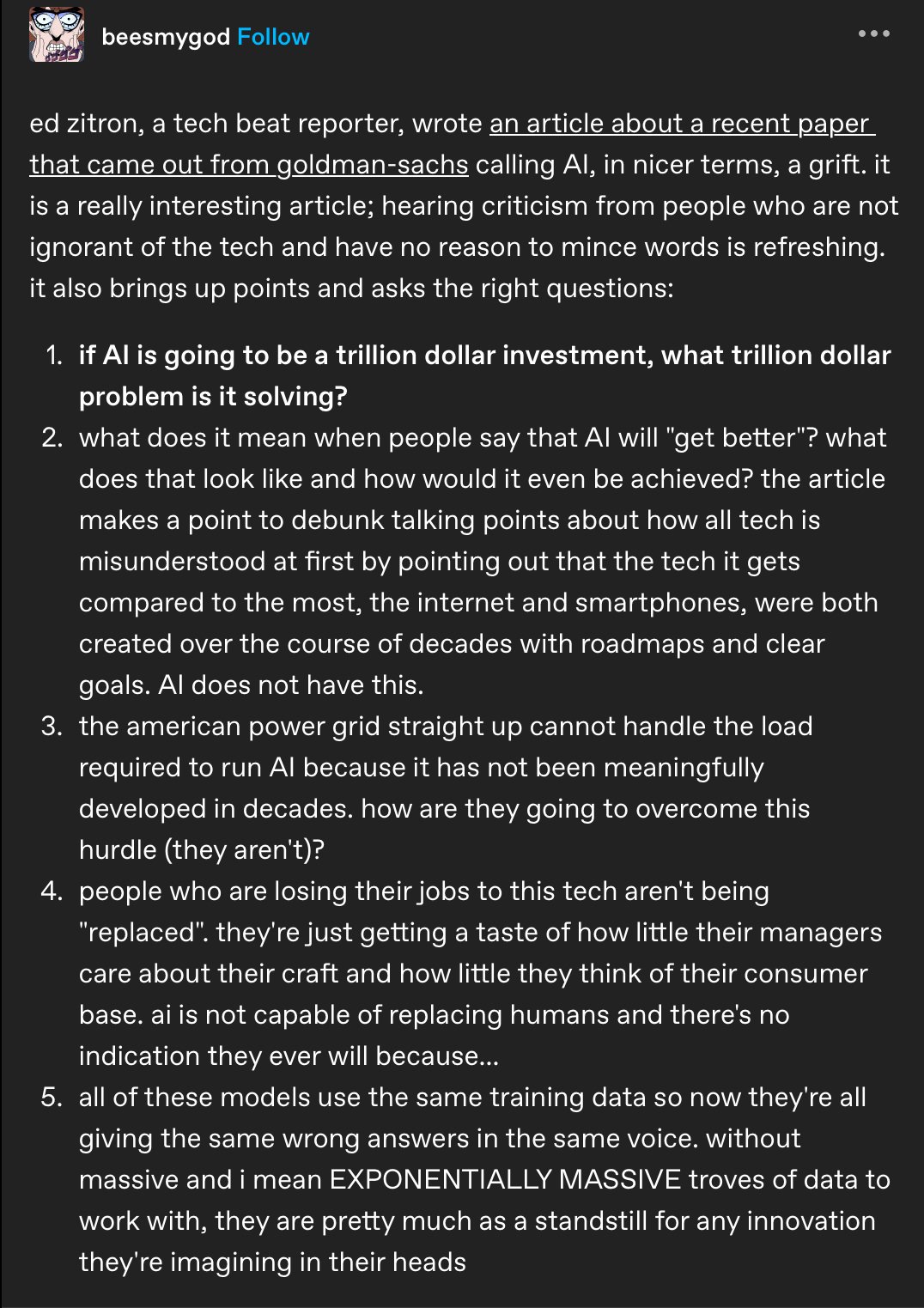

Ok, but point 4 is a bit too based for GS.

Though I have been arguing that at some point ("voluntary") consumption just collapses because of general sentiment, e.g. over bad living and working conditions, or just a hopelessly depressive environment (like, I don't wanna buy slave chocolate).

Fuck AI

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

Nice, now I've read a post about an article about a paper by Goldman Sachs. See you later if I find the original paper; otherwise there's nothing really to discuss.

The answer to question 1, to me, seems to be that it promises to replace the workforce across many fields. This idea makes investors and Capitalists (capital C, the people who have the money and want to keep it) drool. AI promises to do jobs without needing a paycheck.

I'm not saying I believe it will deliver. I'm saying it is being promised, or at least implied. Therefore, I agree, there's a lot of grift happening. Just like crypto and NFTs, grift grift grift.

It’s a familiar con.

It’s just that this time they’re firing people first, then failing to make it work.

Yeah, that seems to be the end goal, but Goldman Sachs themselves tried using AI for a task and found it cost six times as much as paying a human.

That cost isn't going down. By any measure, AI is just getting more expensive, as our only strategy for improving it seems to be "throw more compute, data, and electricity at the problem." Even new AI chips promise increased performance but with more power draw, and everybody developing AI models seems to be taking the stance of just maximizing performance and damn everything else. So even if AI somehow delivers on its promises and replaces every white-collar job, it's not going to save corporations any actual money.

Granted, companies may be willing to eat that cost, at least temporarily, in order to suppress labor costs (read: pay us less), but it's not a long-term solution.

You have a tl;dr of this?

Edit: this was supposed to be a joke.

Seriously? It'll take you a minute to read, if you read slowly.

I can summarise it with AI:

Ed Zitron, a tech beat reporter, criticizes a recent paper from Goldman Sachs, calling AI a "grift." The article raises questions about the investment, the problem AI solves, and the promise of AI getting better. It debunks misconceptions about AI, pointing out that AI has not been developed over decades and that the American power grid cannot handle the load AI requires. The article also highlights that AI is not capable of replacing humans and that AI models use the same training data, leaving innovation at a standstill.

Points 2 and 3 are legit, especially the part about not having a roadmap; a lot of what's going on at this point is pure improvisation, trying different things to see what sticks. The grid is a problem, but fixing it is long overdue. In any case, these companies will just build their own power supply if the government can't get its head out of its ass and start fixing the problem (Microsoft is already doing this).

The last two points specifically show that this person doesn't know the technology, which is exactly what they are accusing others of.

It's already replacing people. You don't need it to do all the work; it will still bring about layoffs if it lets one person do the job of five. It's already affecting jobs like concept artist, and every website that used to have a person at the other end of its chat app now has an LLM. This is also only the start; it's the equivalent of people in the early 90s thinking computers wouldn't affect the workforce. That view won't hold up for long.

The data point is also quite a bold statement. Anyone keeping abreast of the technology knows that it's now about curating the datasets, not augmenting them. There's also a paper coming out every day about new training strategies, which help a lot more than a few extra shitposts from Reddit.

I can answer one of these criticisms regarding innovation: AI is incredibly inefficient at what it does. From training to execution, it's but a fraction as efficient as it could be. For this reason most of the innovation going on in AI right now is related to improving efficiency.

We've already had massive improvements to things like AI image generation (e.g. SDXL Turbo, which can generate an image in 1 second instead of 10), and there are new LLMs coming out all the time that are a fraction of the size of their predecessors, use a fraction of the computing power, and yet perform better for most use cases.

There are other innovations that have the potential to reduce power requirements by factors of a thousand to millions, such as ternary training and execution. If ternary AI models turn out to be workable in the real world (I see no reason why they couldn't), we'll be able to run the equivalent of ChatGPT 4 locally on our phones, and it won't even be a blip on the radar from a battery-life perspective, nor will it require more powerful CPUs/GPUs.
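For anyone wondering why "ternary" would save power: if every weight is -1, 0, or +1, a matrix-vector product needs no per-weight multiplications, only additions, subtractions, and skips, plus one scale multiply per output. Below is a minimal sketch of that idea, assuming an "absmean" quantization rule modeled on the published BitNet b1.58 recipe; the function names `ternarize` and `ternary_matvec` are made up for illustration, and this says nothing about how much real-world power is actually saved.

```python
from typing import List, Tuple


def ternarize(weights: List[List[float]]) -> Tuple[List[List[int]], float]:
    """Quantize a weight matrix to {-1, 0, +1} with one shared scale.

    Assumed "absmean" rule: scale = mean(|w|), then each weight is
    divided by the scale, rounded, and clipped to the range [-1, 1].
    """
    flat = [abs(w) for row in weights for w in row]
    scale = sum(flat) / len(flat) or 1.0  # guard against an all-zero matrix
    q = [[max(-1, min(1, round(w / scale))) for w in row] for row in weights]
    return q, scale


def ternary_matvec(q: List[List[int]], scale: float, x: List[float]) -> List[float]:
    """Approximate W @ x using only additions and subtractions per weight."""
    out = []
    for row in q:
        acc = 0.0
        for t, xi in zip(row, x):
            if t == 1:
                acc += xi
            elif t == -1:
                acc -= xi
            # t == 0: the weight contributes nothing, so skip it
        out.append(acc * scale)  # one multiply per output row, not per weight
    return out


if __name__ == "__main__":
    w = [[0.9, -0.05, -1.1], [0.2, 0.6, -0.4]]
    q, s = ternarize(w)
    print(q)
    print(ternary_matvec(q, s, [1.0, 2.0, 3.0]))
```

The result is only an approximation of the full-precision product; the bet behind ternary models is that training with this constraint from the start keeps the quality loss small.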

As usual, a critic of novel tech gets some things right and some things wrong, but overall it's not bad. Building a critique of LLMs on a cartoon understanding, one that skips the technical details of what is novel about the approach and judges it only by how commercial products use it, can be an overly narrow lens on what the technology could be, but it isn't too far off from what it currently is.

I suspect LLMs or something like them will be part of something approaching AGI. The good part is that once the tech exists, you don't have to reinvent it and can test its boundaries and how it would integrate with other systems, but whether that is 1%, 5%, or 80% of an overall solution is unknown.

More data won't make a difference. Differences will still happen. Training is what matters, and what demands nation-state levels of power. You can run some very fancy models right on your dang telephone. I think you could implement Not Hotdog on a Game Boy Camera.

The long-term goal remains straight-up sci-fi artificial intelligence. Current models and methods might not get there, but they got us a lot closer in quite a hurry. A lot of cool stuff we were certain could only be done by an intelligent system... yeah, not so much. So once again "AI is whatever hasn't been done." What has been done will be fascinating in video games, movies, and an endless fountain of anime pornography.

Expecting any of that to be worth a trillion dollars is a fairy tale.

The two letters y'all should consistently be mad at are "VC."

They were the problem with cash you can e-mail becoming a speculation disaster, they were the problem with digital commemorative plates becoming an industry made up entirely of scams, and they are the problem with every company's C-suite racing to squander money on using this hot new tech for... something. At some point they'll move on to the next whiz-bang innovation that's maybe-sorta-kinda useful, and well-meaning people will complain about that thing as if the thing is what's wrong.